Paper:

Hybrid Caption Including Formula or Figure for Deaf and Hard-of-Hearing Students

Daisuke Wakatsuki*, Tatsuya Arai*, and Takeaki Shionome**

*Tsukuba University of Technology

4-3-15 Amakubo, Tsukuba, Ibaraki 305-8520, Japan

**Teikyo University

1-1 Toyosatodai, Utsunomiya, Tochigi 320-8551, Japan

Captions, which are used as a means of information support for deaf and hard-of-hearing students, are usually presented only in text form. Therefore, when mathematical equations and figures are frequently used in a classroom, the captions contain several demonstrative words such as “this equation” or “that figure.” Because the captions are sometimes displayed with a delay after the teacher’s utterance, it is difficult for users to accurately identify what these demonstrative words refer to. In this study, we prepared hybrid captions that include mathematical equations and figures and verified their effectiveness by comparing them with conventional text-only captions. The results suggested that the hybrid captions were at least as effective as the conventional captions at helping the students understand the lesson contents. A subjective evaluation based on a questionnaire survey also showed that the participants found the hybrid captions acceptable and experienced no discomfort. Furthermore, there was no difference in the number of eye movements of the participants during the experiment, suggesting that the physical load was similar for both types of captions.

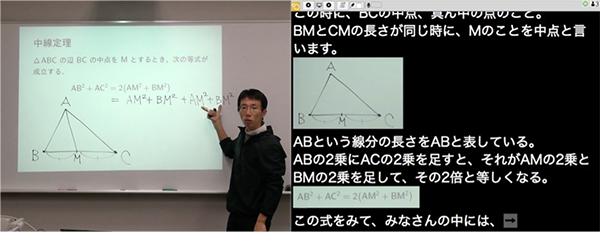

Example of a hybrid captioned lecture

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
