Paper:

Adaptive Personalized Multiple Machine Learning Architecture for Estimating Human Emotional States

Akihiro Matsufuji, Eri Sato-Shimokawara, and Toru Yamaguchi

Tokyo Metropolitan University

6-6 Asahigaoka, Hino, Tokyo 191-0065, Japan

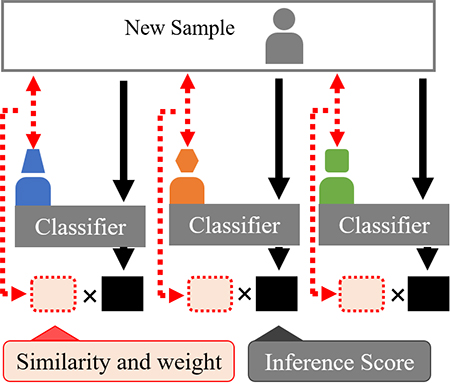

Robots have the potential to facilitate the future education of all generations, particularly children. However, existing robots are limited in their ability to automatically perceive and respond to human emotional states. We hypothesize that the models underlying such robots suffer from individual differences in human personality. Therefore, we propose a multi-characteristic model architecture that combines personalized machine learning models and utilizes the prediction score of each model. This architecture is formed with reference to an ensemble machine learning architecture. In this study, we present a method for calculating the weighted average in a multi-characteristic architecture by using the similarities between a new sample and the trained characteristics. As the human internal state, we estimate the degree of confidence during communication. Empirical results demonstrate that training multiple models on each person’s information to account for individual differences improves on a traditional machine learning system and offers insight into handling diverse individual differences.
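The similarity-weighted averaging described in the abstract can be illustrated with a minimal sketch. The abstract does not specify the similarity measure, the representation of a "trained characteristic," or the weight normalization, so everything concrete here is an assumption made for illustration: cosine similarity, a per-person feature centroid, clipping of negative similarities, and the names `cosine_similarity`, `similarity_weights`, and `weighted_score` are all hypothetical. The per-person models are stand-in functions that return a confidence score, not trained classifiers.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def similarity_weights(sample, centroids):
    """Turn similarities to each person's centroid into weights that sum to 1.

    Assumption: negative similarities are clipped to zero, and if every
    similarity is zero the weights fall back to a uniform average.
    """
    sims = [max(cosine_similarity(sample, c), 0.0) for c in centroids]
    total = sum(sims)
    if total == 0.0:
        return [1.0 / len(centroids)] * len(centroids)
    return [s / total for s in sims]


def weighted_score(sample, centroids, models):
    """Weighted average of the per-person models' prediction scores."""
    weights = similarity_weights(sample, centroids)
    scores = [model(sample) for model in models]
    return sum(w * s for w, s in zip(weights, scores))


# Hypothetical setup: two trained persons, each summarized by a centroid
# and a stand-in model that returns a fixed confidence score.
centroids = [[1.0, 0.0], [0.0, 1.0]]
models = [lambda x: 0.9, lambda x: 0.1]

score_like_person_0 = weighted_score([1.0, 0.0], centroids, models)
score_in_between = weighted_score([1.0, 1.0], centroids, models)
```

In this sketch, a new sample that resembles only the first person is scored by that person's model alone, while a sample equally similar to both persons receives the plain average of the two scores.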

Adaptation using personalized models

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
