Paper:

Modular Neural Network for Learning Visual Features, Routes, and Operation Through Human Driving Data Toward Automatic Driving System

Shun Otsubo*, Yasutake Takahashi*, and Masaki Haruna**

*Graduate School of Engineering, University of Fukui

3-9-1 Bunkyo, Fukui, Fukui 910-8507, Japan

**Advanced Technology R&D Center, Mitsubishi Electric Corporation

8-1-1 Tsukaguchi-honmachi, Amagasaki, Hyogo 661-8661, Japan

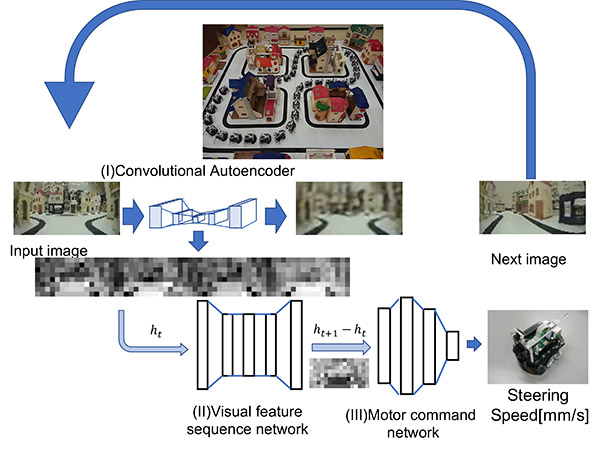

This paper proposes an automatic driving system built from a combination of modular neural networks trained on human driving data. Research on automatic driving vehicles has been actively conducted in recent years, and machine learning techniques are often used to build automatic driving systems that imitate human driving operations. Almost all of these systems adopt a large monolithic learning module, as typified by deep learning. However, it is inefficient to train a single monolithic deep learning module to map the visual information available to a human driver onto that driver's operations (accelerating, braking, and steering). We propose combining a series of modular neural networks that independently learn visual features, routes, and driving operations from human driving data, thereby imitating human driving operations and learning multiple routes efficiently. We demonstrate the effectiveness of the proposed method through experiments using a small vehicle.
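The abstract describes the system only at the level of its three learned modules. The following Python sketch shows one hypothetical way such modules could be chained: a shared visual feature module, one route module per route, and an operation module that outputs driving commands. The names `Dense` and `ModularDriver`, the layer sizes, the concatenation of features with a route cue, and the [accelerator, brake, steering] output layout are all illustrative assumptions, not the authors' architecture; the untrained random layers merely stand in for networks that would be trained on human driving data.

```python
import math
import random
from typing import Callable, Dict, List

Vector = List[float]
Module = Callable[[Vector], Vector]


class Dense:
    """Fully connected layer with tanh activation; a stand-in for a trained network."""

    def __init__(self, n_in: int, n_out: int, rng: random.Random) -> None:
        self.n_in = n_in
        self.w = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out

    def __call__(self, x: Vector) -> Vector:
        if len(x) != self.n_in:
            raise ValueError(f"expected {self.n_in} inputs, got {len(x)}")
        return [math.tanh(sum(w * v for w, v in zip(row, x)) + b)
                for row, b in zip(self.w, self.b)]


class ModularDriver:
    """Hypothetical chain of separately trained modules: image -> features -> route cue -> commands."""

    def __init__(self, feature_module: Module, operation_module: Module) -> None:
        self.feature_module = feature_module      # shared across all routes
        self.operation_module = operation_module  # maps features + route cue to commands
        self.route_modules: Dict[str, Module] = {}

    def add_route(self, name: str, module: Module) -> None:
        # In this sketch, supporting a new route only adds a route module;
        # the feature and operation modules are left untouched.
        self.route_modules[name] = module

    def act(self, image: Vector, route: str) -> Vector:
        features = self.feature_module(image)
        route_cue = self.route_modules[route](features)
        # Assumed output layout: [accelerator, brake, steering], each in (-1, 1).
        return self.operation_module(features + route_cue)


if __name__ == "__main__":
    rng = random.Random(0)
    driver = ModularDriver(feature_module=Dense(16, 8, rng),
                           operation_module=Dense(12, 3, rng))
    driver.add_route("route_a", Dense(8, 4, rng))
    driver.add_route("route_b", Dense(8, 4, rng))
    image = [rng.random() for _ in range(16)]  # placeholder for a flattened camera frame
    print(driver.act(image, "route_a"))
    print(driver.act(image, "route_b"))
```

The point of the design choice illustrated here is that the same camera frame yields different commands depending on which route module is selected, while the shared feature module is reused across routes rather than relearned.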

Modular neural network for automatic driving

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
