Paper:

Analog Value Associative Memory Using Restricted Boltzmann Machine

Yuichiro Tsutsui and Masafumi Hagiwara†

Department of Information and Computer Science, Keio University

3-14-1 Hiyoshi, Kohoku-ku, Yokohama, Kanagawa 223-8522, Japan

†Corresponding author

In this paper, we propose an analog value associative memory using the Restricted Boltzmann Machine (AVAM). Research on handling knowledge is becoming increasingly important in fields such as natural language processing and computer vision, and associative memory plays an important role in storing knowledge. First, we obtain distributed representations of words with analog values using word2vec. The obtained distributed representations are then learned by the proposed AVAM. In the evaluation experiments, we found a simple but very important phenomenon in the word2vec method: almost all of the values in the generated vectors are small. By applying a conventional normalization method to each word vector, the performance of the proposed AVAM is greatly improved. Detailed experimental evaluations are carried out to demonstrate the superior performance of the proposed AVAM.
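The abstract does not say which "conventional normalization method" is applied to each word vector. The sketch below assumes per-vector min-max scaling into [0, 1], which fits RBM visible units that are read as probabilities. It shows how word2vec-style components clustered near zero get spread over the full unit interval. The function name `min_max_normalize` and the sample vector are hypothetical.

```python
from typing import List


def min_max_normalize(vec: List[float]) -> List[float]:
    """Linearly rescale one word vector so its smallest component maps to 0
    and its largest to 1. Assumed normalization; the paper does not name it."""
    lo, hi = min(vec), max(vec)
    if hi == lo:
        # Degenerate constant vector: map every component to the midpoint.
        return [0.5] * len(vec)
    return [(x - lo) / (hi - lo) for x in vec]


# Hypothetical word2vec-style vector: every component is close to zero.
raw = [0.012, -0.034, 0.005, 0.021, -0.008]
norm = min_max_normalize(raw)
print([round(x, 3) for x in norm])
```

Per-vector scaling, unlike scaling each dimension across the whole vocabulary, keeps each word's relative component pattern. It also gives every word the full [0, 1] range.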

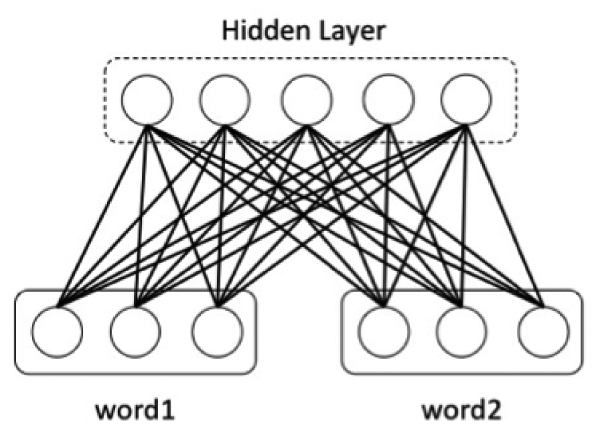

Structure of the proposed analog value associative memory using RBM (AVAM)
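The text does not detail the AVAM layer layout or its learning and recall procedure. The following is therefore only a sketch under stated assumptions. It assumes one RBM whose visible layer concatenates a normalized "key" vector and an associated "value" vector, with analog visible units in [0, 1] and binary hidden units. Training uses one-step contrastive divergence (CD-1). Recall clamps the key units and lets the value units settle. The class `RBM`, its methods, and the toy patterns are hypothetical.

```python
import math
import random
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class RBM:
    """RBM with analog visible units in [0, 1] (treated as probabilities)
    and binary hidden units, trained with CD-1. Illustrative sketch only."""

    def __init__(self, n_visible: int, n_hidden: int, seed: int = 0) -> None:
        self.rng = random.Random(seed)
        self.nv, self.nh = n_visible, n_hidden
        self.W = [[self.rng.gauss(0.0, 0.01) for _ in range(n_hidden)]
                  for _ in range(n_visible)]
        self.b = [0.0] * n_visible  # visible biases
        self.c = [0.0] * n_hidden   # hidden biases

    def hidden_probs(self, v: List[float]) -> List[float]:
        return [sigmoid(self.c[j] + sum(v[i] * self.W[i][j] for i in range(self.nv)))
                for j in range(self.nh)]

    def visible_probs(self, h: List[float]) -> List[float]:
        return [sigmoid(self.b[i] + sum(h[j] * self.W[i][j] for j in range(self.nh)))
                for i in range(self.nv)]

    def cd1_update(self, v0: List[float], lr: float) -> None:
        ph0 = self.hidden_probs(v0)
        h0 = [1.0 if self.rng.random() < p else 0.0 for p in ph0]  # sample hidden states
        v1 = self.visible_probs(h0)  # analog (mean-field) reconstruction
        ph1 = self.hidden_probs(v1)
        for i in range(self.nv):
            for j in range(self.nh):
                self.W[i][j] += lr * (v0[i] * ph0[j] - v1[i] * ph1[j])
            self.b[i] += lr * (v0[i] - v1[i])
        for j in range(self.nh):
            self.c[j] += lr * (ph0[j] - ph1[j])

    def recall(self, cue: List[float], clamp: List[bool], steps: int = 20) -> List[float]:
        """Hold clamped units at the cue; free units start at 0.5 and settle."""
        v = [x if k else 0.5 for x, k in zip(cue, clamp)]
        for _ in range(steps):
            v_new = self.visible_probs(self.hidden_probs(v))
            v = [cue[i] if clamp[i] else v_new[i] for i in range(self.nv)]
        return v


# Hypothetical toy memory: each pattern is a 3-dim key followed by a 3-dim value.
patterns = [
    [1.0, 0.0, 1.0, 0.9, 0.1, 0.2],
    [0.0, 1.0, 0.0, 0.1, 0.8, 0.9],
]
rbm = RBM(n_visible=6, n_hidden=8, seed=1)
for _ in range(2000):
    for p in patterns:
        rbm.cd1_update(p, lr=0.1)

clamp = [True, True, True, False, False, False]  # key known, value to be recalled
cue = patterns[0][:3] + [0.0, 0.0, 0.0]
recalled = rbm.recall(cue, clamp)
print([round(x, 2) for x in recalled[3:]])
```

Keeping the visible units analog (mean-field reconstructions instead of sampled binary states) lets the memory store and return real-valued vectors directly. This matches the analog-value goal stated in the abstract. Whether AVAM itself works this way is not confirmed by the text.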

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.