Paper:

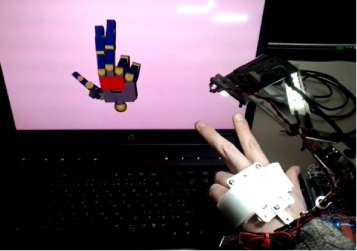

Wearable Device for High-Speed Hand Pose Estimation with a Ultrasmall Camera

Motomasa Tomida and Kiyoshi Hoshino

Graduate School of Systems and Information Engineering, University of Tsukuba

1-1-1 Tennodai, Tsukuba, Ibaraki 305-0006, Japan

Hand pose estimation with ultrasmall camera

- [1] N. S. Pollard, J. K. Hodgins, M. J. Riley, and C. G. Atkeson, “Adapting human motion for the control of a humanoid robot,” Proc. of IEEE Int. Conf. on Robotics and Automation, Vol.2, pp. 1390-1397, 2002.

- [2] T. Asfour and R. Dillmann, “Human-like motion of a humanoid robot arm based on a closed form solution of the inverse kinematics problem,” Proc. IEEE/RSJ Conf. Intelligent Robots and Systems, pp. 1407-1412, Las Vegas, Nevada, October 2003.

- [3] J. M. Rehg and T. Kanade, “Visual tracking of high DOF articulated structures: an application to human hand tracking,” European Conf. Computer Vision, pp. 35-46, 1994.

- [4] Y. Kameda and M. Minoh, “A human motion estimation method using 3-successive video frames,” Proc. Virtual Systems and Multimedia, pp. 135-140, 1996.

- [5] S. Lu, D. Metaxas, D. Samaras, and J. Oliensis, “Using multiple cues for hand tracking and model refinement,” Proc. CVPR2003, Vol.2, pp. 443-450, 2003.

- [6] T. Gumpp, P. Azad, K. Welke, E. Oztop, R. Dillmann, and G. Cheng, “Unconstrained real-time markerless hand tracking for humanoid interaction,” Proc. IEEE-RAS Int. Conf. on Humanoid Robots, CD-ROM, 2006.

- [7] V. Athitsos and S. Sclaroff, “An appearance-based framework for 3D hand shape classification and camera viewpoint estimation,” Proc. Automatic Face and Gesture Recognition, pp. 40-45, 2002.

- [8] K. Hoshino and T. Tanimoto, “Real time search for similar hand images from database for robotic hand control,” IEICE Trans. on Fundamentals of Electronics, Communications and Computer Sciences, Vol.E88-A, pp. 2514-2520, 2005.

- [9] Y. Wu, J. Lin, and T. S. Huang, “Analyzing and capturing articulated hand motion in image sequences,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.27, pp. 1910-1922, 2005.

- [10] K. Hoshino, E. Tamaki, and T. Tanimoto, “Copycat hand – Robot hand imitating human motions at high speed and with high accuracy,” Advanced Robotics, Vol.21, No.15, pp. 1743-1761, 2007.

- [11] K. Hoshino and M. Tomida, “3D hand pose estimation using a single camera for unspecified users,” J. of Robotics and Mechatronics, Vol.21, No.6, pp. 749-757, 2009.

- [12] N. Otsu and T. Kurita, “A new scheme for practical, flexible and intelligent vision systems,” Proc. IAPR Workshop on Computer Vision, pp. 431-435, 1998.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
