Paper:

Comparison of External Human–Machine Interfaces for Presenting the Intention to Yield from an Automated Vehicle to a Driver

Hidehiro Saeki*,†

and Kazunori Shidoji**

*Graduate School of Integrated Frontier Sciences, Kyushu University

744 Motooka, Nishi-ku, Fukuoka, Fukuoka 819-0395, Japan

†Corresponding author

**Faculty of Information Science and Electrical Engineering, Kyushu University

744 Motooka, Nishi-ku, Fukuoka, Fukuoka 819-0395, Japan

Drivers use turn signals and stop lamps to inform surrounding road users of their intentions and behavior. In practice, however, these signals are insufficient, so drivers also use gestures and eye contact to communicate with others as necessary. Automated vehicles that are currently being tested or put into practical use are not equipped with such communication functions. Therefore, we focus on communication methods using an external human–machine interface (eHMI). In this study, we conducted a driving-simulator experiment to examine the effect of an eHMI on a driver who intends to enter the main road from the parking lot of an off-road facility when an automated vehicle traveling on the main road presents its intention to yield. The results of a subjective evaluation indicated that drivers' understanding of the eHMI was related to the number of times they had encountered it. When the text-display eHMI presented specific information, drivers merging onto the main road made their merging decisions earlier than in the other conditions.

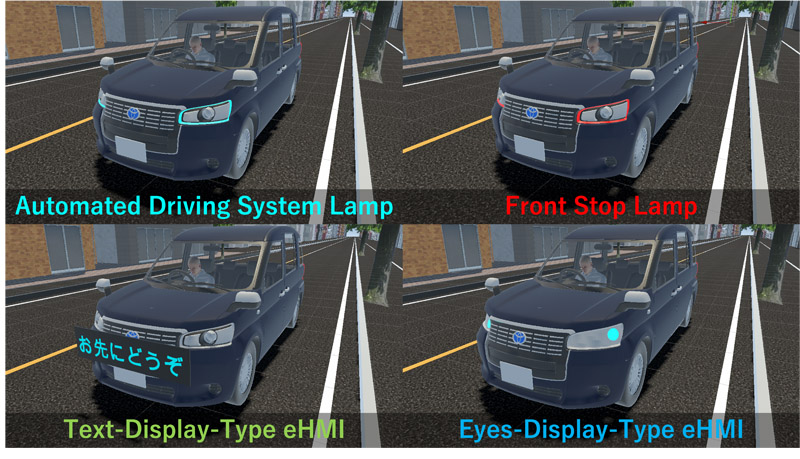

Four eHMI designs compared in this study

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
