Paper:

Localization and Situational Awareness for Autonomous Ship Using Trinocular Stereo Vision

Shigehiro Yamamoto*

and Takeshi Hashimoto**

*Graduate School of Maritime Sciences, Kobe University

5-1-1 Fukae-minami-machi, Higashinada-ku, Kobe, Hyogo 658-0022, Japan

**Faculty of Engineering, Shizuoka University

3-5-1 Johoku, Chuo-ku, Hamamatsu, Shizuoka 432-8561, Japan

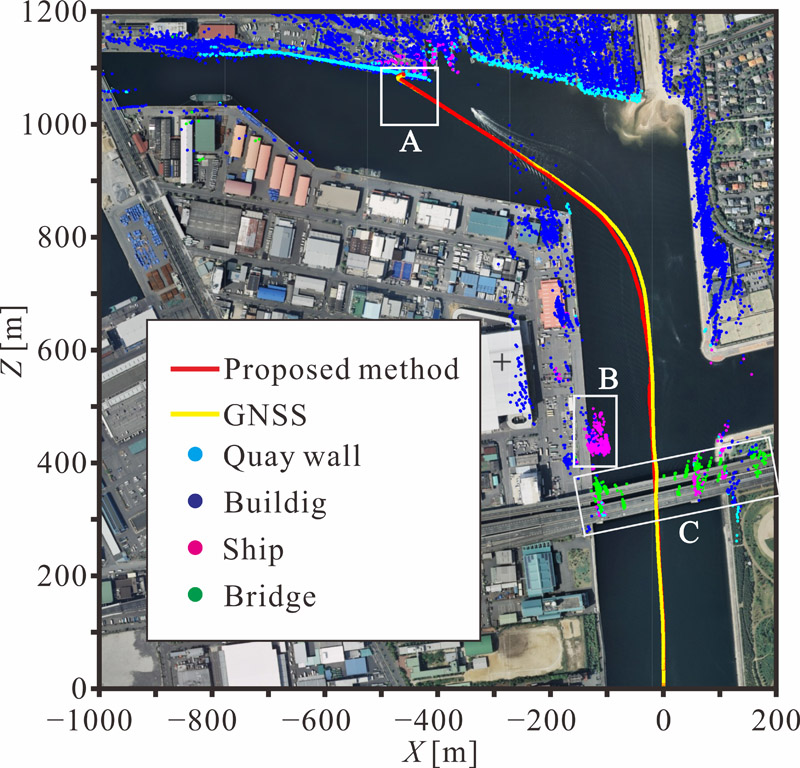

Just as automated vehicles and autonomous mobile robots are in demand on land, autonomous surface ships are in demand in the maritime field. An autonomous ship must be able to recognize objects in its vicinity and accurately determine its position relative to those objects. In this paper, we propose a novel method that uses trinocular stereo vision to create a map of the ship’s surroundings and to accurately estimate the ship’s location and motion within this map. In the proposed method, the world coordinates of feature points, such as those on land-based structures, are measured at the ship’s initial position. As the ship moves, these feature points are tracked across images using optical flow. The ship’s world coordinates are then estimated from the pixel and world coordinates of the tracked feature points. Furthermore, the feature points in the three-dimensional map are classified using deep learning. A navigation experiment over a distance of 1000 m confirmed that the system can accurately estimate the position, heading, and speed of the ship and can identify quay walls, other ships, and bridges in three dimensions. These results indicate that the proposed method is suitable for automated berthing systems.
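The abstract condenses the localization loop into two steps: measure landmark coordinates once with stereo, then recover the ship’s pose from where those landmarks reappear in later images. The Python sketch below illustrates both steps in a deliberately simplified, planar setting. It assumes a horizontal, forward-looking pinhole camera with hypothetical intrinsics (`FOCAL_PX`, `CX`), rectified and collinear trinocular cameras, and three-degree-of-freedom motion (x, y, heading) on the sea surface. The pose step is a bearing-only Gauss-Newton resection standing in for a full 3D perspective-n-point (PnP) solver; it is not the authors’ implementation, and every function name is hypothetical.

```python
import math

# Hypothetical intrinsics of a horizontal, forward-looking pinhole camera (pixels).
FOCAL_PX = 1000.0  # assumed focal length
CX = 960.0         # assumed principal-point column


def wrap(a):
    """Wrap an angle into [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


def pixel_to_bearing(u, f=FOCAL_PX, cx=CX):
    """Bearing (rad) of the ray through image column u, clockwise from the optical axis."""
    return math.atan2(u - cx, f)


def landmark_from_trinocular(u, disparities, baselines, f=FOCAL_PX, cx=CX):
    """Forward range and rightward offset (m) of a landmark seen by three collinear cameras.

    Assumes rectified images with d_k = f * B_k / Z for each camera pair k,
    and fits the common slope f / Z by least squares over all pairs.
    """
    slope = sum(d * b for d, b in zip(disparities, baselines)) / sum(b * b for b in baselines)
    z = f / slope
    return z, (u - cx) * z / f


def solve3(a, b):
    """Solve the 3x3 system a @ x = b by Gaussian elimination with partial pivoting."""
    m = [row[:] + [bi] for row, bi in zip(a, b)]
    for col in range(3):
        piv = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[piv] = m[piv], m[col]
        for r in range(col + 1, 3):
            k = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= k * m[col][c]
    x = [0.0, 0.0, 0.0]
    for r in range(2, -1, -1):
        x[r] = (m[r][3] - sum(m[r][c] * x[c] for c in range(r + 1, 3))) / m[r][r]
    return x


def estimate_pose(world_pts, pixels, guess, iters=20):
    """Gauss-Newton fit of the planar pose (x, y, psi) to tracked landmark pixels.

    world_pts: landmark (X, Y) in the world frame; pixels: their current image columns;
    guess: starting pose, e.g. the previous frame's estimate. psi is the optical-axis
    direction, counterclockwise from the world X-axis.
    """
    x, y, psi = guess
    for _ in range(iters):
        jtj = [[0.0] * 3 for _ in range(3)]
        jtr = [0.0] * 3
        for (X, Y), u in zip(world_pts, pixels):
            dx, dy = X - x, Y - y
            r2 = dx * dx + dy * dy
            # Residual: predicted world angle to the landmark minus the observed ray angle.
            res = wrap(math.atan2(dy, dx) - (psi - pixel_to_bearing(u)))
            jac = (dy / r2, -dx / r2, -1.0)  # d(res)/d(x, y, psi)
            for i in range(3):
                jtr[i] += jac[i] * res
                for j in range(3):
                    jtj[i][j] += jac[i] * jac[j]
        step = solve3(jtj, [-v for v in jtr])  # normal equations: J^T J dp = -J^T r
        x, y, psi = x + step[0], y + step[1], wrap(psi + step[2])
        if max(abs(s) for s in step) < 1e-10:
            break
    return x, y, psi


def speed_over_ground(p0, p1, dt):
    """Speed (m/s) between two successive position fixes taken dt seconds apart."""
    return math.hypot(p1[0] - p0[0], p1[1] - p0[1]) / dt
```

In a tracking loop, each frame’s estimate would serve as the next frame’s `guess`, consecutive positions would feed `speed_over_ground`, and `psi` equals the ship’s heading only if the camera faces straight ahead. A real system would work in 3D with full intrinsics and lens distortion (for example, OpenCV’s `cv2.solvePnP`) and would reject outlier tracks.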

Ship’s track in the surrounding object map

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
