Paper:

Real-World Validation of a Learned Policy for Cooperative Object Transport with the Transformer Encoder

Yuichiro Sueoka

, Seiya Ozaki, Yuki Kato, and Takahiro Yoshida

, Seiya Ozaki, Yuki Kato, and Takahiro Yoshida

Department of Mechanical Engineering, Graduate School of Engineering, The University of Osaka

2-1 Yamadaoka, Suita, Osaka 565-0871, Japan

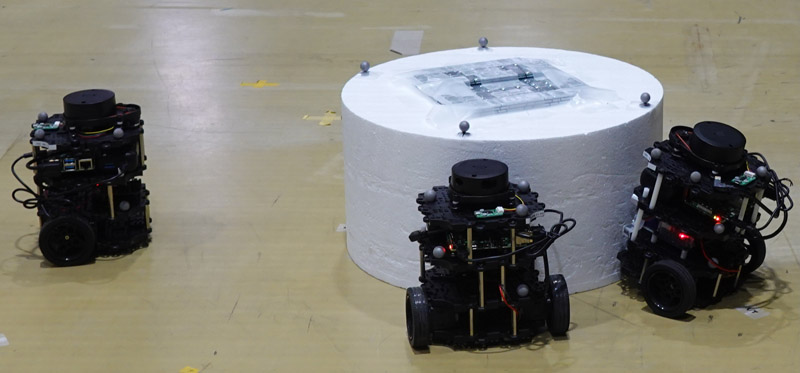

In recent years, swarm robotic systems are expected to be used in open environments such as disaster sites. Considering applications in open environments, systems are required to maintain functionality even when the number of robots changes or communication among them is unstable. On the other hand, the design methodology for systems that take these constraints into account has not been sufficiently discussed. Our previous research proposed the use of the transformer encoder (self-attention mechanism), enabling the design of a neural network that remains effective even when the amount of observation data available to each robot varies. However, that work did not include validation in real-world environments, and it remains unclear whether the cooperative behaviors learned using the transformer encoder can be effectively applied outside of simulation. To address this, this paper aims to investigate the feasibility of sim-to-real transfer and examines the differences between the simulation and real-world environments. After training a policy model for cooperative object transport in a simulation environment, we conduct real-world experiments using TurtleBot3 Burgers to evaluate its applicability, adaptability to changes in robot numbers without retraining, and differences between simulation and real-world environments.

Sim-to-Real transfer for cooperative object transport

- [1] E. Şahin, “Swarm robotics: From sources of inspiration to domains of application,” E. Şahin and W. M. Spears (Eds.), “Swarm Robotics. SR 2004. Lecture Notes in Computer Science, Vol.3342,” pp. 10-20, 2005. https://doi.org/10.1007/978-3-540-30552-1_2

- [2] J. Werfel, K. Petersen, and R. Nagpal, “Designing collective behavior in a termite-inspired robot construction team,” Science, Vol.343, No.6172, pp. 754-758, 2014. https://doi.org/10.1126/science.1245842

- [3] K. Nagatani, M. Abe, K. Osuka, P. j. Chun, T. Okatani, M. Nishio, S. Chikushi, T. Matsubara, Y. Ikemoto, and H. Asama, “Innovative technologies for infrastructure construction and maintenance through collaborative robots based on an open design approach,” Advanced Robotics, Vol.35, No.11, pp. 715-722, 2021. https://doi.org/10.1080/01691864.2021.1929471

- [4] M. Rubenstein, A. Cabrera, J. Werfel, G. Habibi, J. Mclurkin, and R. Nagpal, “Collective transport of complex objects by simple robots: Theory and experiments,” The 12th Int. Conf. on Autonomous Agents and Multiagent Systems (AAMAS 2013), pp. 47-54, 2013.

- [5] J. Chen, M. Gauci, W. Li, A. Kolling, and R. Groß, “Occlusion-based cooperative transport with a swarm of miniature mobile robots,” IEEE Trans. on Robotics, Vol.31, No.2, pp. 307-321, 2015. https://doi.org/10.1109/TRO.2015.2400731

- [6] M. H. M. Alkilabi, A. Narayan, and E. Tuci, “Cooperative object transport with a swarm of e-puck robots: Robustness and scalability of evolved collective strategies,” Swarm Intelligence, Vol.11, pp. 185-209, 2017. https://doi.org/10.1007/s11721-017-0135-8

- [7] G. Eoh and T.-H. Park, “Automatic curriculum design for object transportation based on deep reinforcement learning,” IEEE Access, Vol.9, pp. 137281-137294, 2021. https://doi.org/10.1109/ACCESS.2021.3118109

- [8] T. Niwa, K. Shibata, and T. Jimbo, “Multi-agent reinforcement learning and individuality analysis for cooperative transportation with obstacle removal,” F. Matsuno, S.-i. Azuma, and M. Yamamoto (Eds.), “Distributed Autonomous Robotic Systems. DARS 2021. Springer Proc. in Advanced Robotics, Vol.22,” pp. 202-213, 2022. https://doi.org/10.1007/978-3-030-92790-5_16

- [9] L. Zhang, Y. Sun, A. Barth, and O. Ma, “Decentralized control of multi-robot system in cooperative object transportation using deep reinforcement learning,” IEEE Access, Vol.8, pp. 184109-184119, 2020. https://doi.org/10.1109/ACCESS.2020.3025287

- [10] T. Wang, H. Dong, V. Lesser, and C. Zhang, “Roma: Multi-agent reinforcement learning with emergent roles,” Proc. of the 37th Int. Conf. on Machine Learning (ICML’20), pp. 9876-9886, 2020.

- [11] T. Wang, T. Gupta, A. Mahajan, B. Peng, S. Whiteson, and C. Zhang, “Rode: Learning roles to decompose multi-agent tasks,” Int. Conf. on Learning Representations, 2021.

- [12] S. Reed, K. Zolna, E. Parisotto, S. G. Colmenarejo, A. Novikov, G. Barth-Maron, M. Giménez, Y. Sulsky, J. Kay, J. T. Springenberg, T. Eccles, J. Bruce, A. Razavi, A. Edwards, N. Heess, Y. Chen, R. Hadsell, O. Vinyals, M. Bordbar, and N. de Freitas, “A generalist agent,” Trans. on Machine Learning Research, 2022.

- [13] M. Wen, J. G. Kuba, R. Lin, W. Zhang, Y. Wen, J. Wang, and Y. Yang, “Multi-agent reinforcement learning is a sequence modeling problem,” Proc. of the 36th Int. Conf. on Neural Information Processing Systems (NIPS’22), pp. 16509-16521, 2022.

- [14] A. Cohen, E. Teng, V.-P. Berges, R.-P. Dong, H. Henry, M. Mattar, A. Zook, and S. Ganguly, “On the use and misuse of absorbing states in multi-agent reinforcement learning,” arXiv preprint, arXiv:2111.05992, 2022. https://doi.org/10.48550/arXiv.2111.05992

- [15] Y. Sueoka, T. Yoshida, and K. Osuka, “Reinforcement learning of scalable, flexible and robust cooperative transport behavior using the transformer encoder,” The 17th Int. Symposium on Distributed Autonomous Robotic Systems (DARS2024), 2024.

- [16] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Proc. of the 31st Int. Conf. on Neural Information Processing Systems (NIPS’17), pp. 6000-6010, 2017.

- [17] S. Ozaki, T. Yoshida, Y. Kato, and Y. Sueoka, “Real-world experiment of cooperative object transportation using self-attention based deep reinforcement learning,” 2025 JSME Conf. on Robotics and Mechatronics, Article No.2P2-I03, 2025 (in Japanese).

- [18] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, M. Dehghani, M. Minderer, G. Heigold, S. Gelly, J. Uszkoreit, and N. Houlsby, “An image is worth 16x16 words: Transformers for image recognition at scale,” arXiv preprint, arXiv:2010.11929, 2020. https://doi.org/10.48550/arXiv.2010.11929

- [19] J. Schulman, F. Wolski, P. Dhariwal, A. Radford, and O. Klimov, “Proximal policy optimization algorithms,” arXiv preprint, arXiv:1707.06347, 2017. https://doi.org/10.48550/arXiv.1707.06347

- [20] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint, arXiv:1412.6980, 2017. https://doi.org/10.48550/arXiv.1412.6980

- [21] J. Schulman, P. Moritz, S. Levine, M. Jordan, and P. Abbeel, “High-dimensional continuous control using generalized advantage estimation,” arXiv preprint, arXiv:1506.02438, 2018. https://doi.org/10.48550/arXiv.1506.02438

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.