Review:

Mobile Robot Navigation Based on Artificial Markers: A Systematic Mapping Study

Moteb Alghamdi*,†, Ashraf Al-Marakeby**, and Salah Abdel-Mageid*,**

*Department of Computer Engineering, College of Computer Science and Engineering, Taibah University

Janadah Bin Umayyah Road, Tayba, Madinah 42353, Saudi Arabia

†Corresponding author

**Systems and Computers Department, Faculty of Engineering, Al-Azhar University

Permanent Camp Street, Nasr City, Cairo 11884, Egypt

Mobile robot navigation plays a vital role in robotics, allowing autonomous systems to function effectively across different environments. Artificial markers offer a dependable and efficient solution for localization and mapping, especially in structured environments. This systematic mapping study examines the existing literature on mobile robot navigation techniques that use artificial markers. The review systematically analyzes marker types, detection algorithms, and navigation strategies in order to uncover research gaps, highlight emerging trends, and suggest potential future directions. By evaluating the strengths and limitations of current approaches, we address key research questions related to marker-based navigation systems for mobile robots.

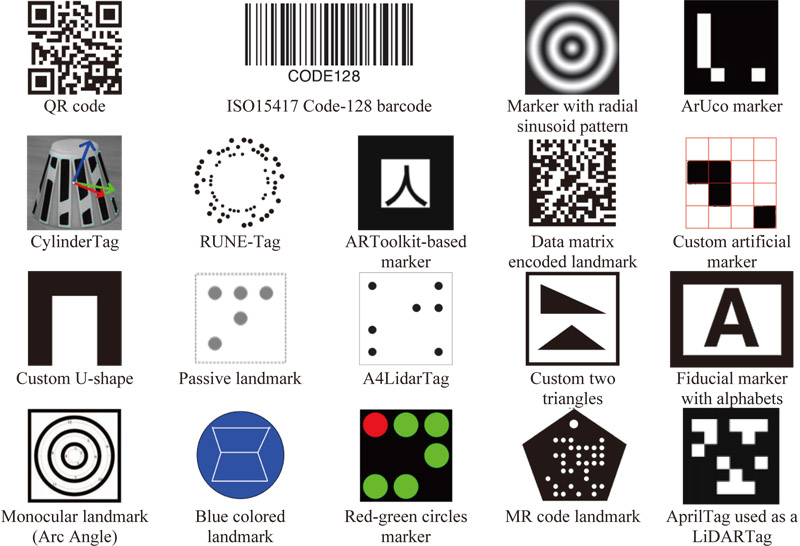

Artificial marker symbols used in the 44 investigated papers

- [1] B. Kitchenham and S. M. Charters, “Guidelines for performing systematic literature reviews in software engineering,” EBSE Technical Report, No.EBSE-2007-01, 2007.

- [2] C. Okoli, “A guide to conducting a standalone systematic literature review,” Communications of the Association for Information Systems, Vol.37, pp. 879-910, 2015. https://doi.org/10.17705/1CAIS.03743

- [3] M. Petticrew and H. Roberts, “Systematic Reviews in the Social Sciences: A Practical Guide,” John Wiley & Sons, Inc., 2006. https://doi.org/10.1002/9780470754887

- [4] B. Kitchenham and P. Brereton, “A systematic review of systematic review process research in software engineering,” Information and Software Technology, Vol.55, No.12, pp. 2049-2075, 2013. https://doi.org/10.1016/j.infsof.2013.07.010

- [5] H. Cooper, “Research Synthesis and Meta-Analysis: A Step-by-Step Approach,” SAGE Publications, Inc., 2017. https://doi.org/10.4135/9781071878644

- [6] M. J. Grant and A. Booth, “A typology of reviews: An analysis of 14 review types and associated methodologies,” Health Information and Libraries J., Vol.26, No.2, pp. 91-108, 2009. https://doi.org/10.1111/j.1471-1842.2009.00848.x

- [7] X. Dong, K. Yuan, Z. Zhu, and F. Wen, “A hybrid method for robot navigation based on MR code landmark,” 8th World Congress on Intelligent Control and Automation, pp. 6676-6680, 2010. https://doi.org/10.1109/WCICA.2010.5554155

- [8] Y. Xu et al., “Design and recognition of monocular visual artificial landmark based on arc angle information coding,” 33rd Youth Academic Annual Conf. of Chinese Association of Automation, pp. 722-727, 2018. https://doi.org/10.1109/YAC.2018.8406466

- [9] Y. Li, S. Zhu, Y. Yu, and Z. Wang, “An improved graph-based visual localization system for indoor mobile robot using newly designed markers,” Int. J. of Advanced Robotic Systems, Vol.15, No.2, 2018. https://doi.org/10.1177/1729881418769191

- [10] V. Kroumov and K. Okuyama, “Localisation and position correction for mobile robot using artificial visual landmarks,” Int. J. of Advanced Mechatronic Systems, Vol.4, No.2, pp. 112-119, 2012. https://doi.org/10.1504/IJAMECHS.2012.048395

- [11] Y. Lei, X. Wang, L. Ren, and Z. Feng, “Research on artificial landmark recognition method based on omni-vision sensor,” 5th Int. Conf. on Intelligent Networks and Intelligent Systems, pp. 325-328, 2012. https://doi.org/10.1109/ICINIS.2012.68

- [12] Z. Taha and J. A. Mat-Jizat, “Landmark navigation in low illumination using omnidirectional camera,” 9th Int. Conf. on Ubiquitous Robots and Ambient Intelligence, pp. 262-265, 2012. https://doi.org/10.1109/URAI.2012.6462990

- [13] L. Cavanini et al., “A QR-code localization system for mobile robots: Application to smart wheelchairs,” 2017 European Conf. on Mobile Robots, 2017. https://doi.org/10.1109/ECMR.2017.8098667

- [14] S.-H. Bach, P.-B. Khoi, and S.-Y. Yi, “Application of QR code for localization and navigation of indoor mobile robot,” IEEE Access, Vol.11, pp. 28384-28390, 2023. https://doi.org/10.1109/ACCESS.2023.3250253

- [15] M. J. Edwards, M. P. Hayes, and R. D. Green, “High-accuracy fiducial markers for ground truth,” 2016 Int. Conf. on Image and Vision Computing New Zealand, 2016. https://doi.org/10.1109/IVCNZ.2016.7804461

- [16] S. Babu and S. Markose, “IoT enabled robots with QR code based localization,” 2018 Int. Conf. on Emerging Trends and Innovations in Engineering and Technological Research, 2018. https://doi.org/10.1109/ICETIETR.2018.8529028

- [17] S.-J. Lee, G. Tewolde, J. Lim, and J. Kwon, “QR-code based localization for indoor mobile robot with validation using a 3D optical tracking instrument,” 2015 IEEE Int. Conf. on Advanced Intelligent Mechatronics, pp. 965-970, 2015. https://doi.org/10.1109/AIM.2015.7222664

- [18] S. Wang et al., “CylinderTag: An accurate and flexible marker for cylinder-shape objects pose estimation based on projective invariants,” IEEE Trans. on Visualization and Computer Graphics, Vol.30, No.12, pp. 7486-7499, 2024. https://doi.org/10.1109/TVCG.2024.3350901

- [19] F. Bergamasco, A. Albarelli, L. Cosmo, E. Rodolà, and A. Torsello, “An accurate and robust artificial marker based on cyclic codes,” IEEE Trans. on Pattern Analysis and Machine Intelligence, Vol.38, No.12, pp. 2359-2373, 2016. https://doi.org/10.1109/TPAMI.2016.2519024

- [20] G. Li and Z. Jiang, “An artificial landmark design based on mobile robot localization and navigation,” 4th Int. Conf. on Intelligent Computation Technology and Automation, pp. 588-591, 2011. https://doi.org/10.1109/ICICTA.2011.597

- [21] Y.-C. Lee, Christiand, H. Chae, and S.-H. Kim, “Applications of robot navigation based on artificial landmark in large scale public space,” 2011 IEEE Int. Conf. on Robotics and Biomimetics, pp. 721-726, 2011. https://doi.org/10.1109/ROBIO.2011.6181371

- [22] G. Kim and E. M. Petriu, “Fiducial marker indoor localization with artificial neural network,” 2010 IEEE/ASME Int. Conf. on Advanced Intelligent Mechatronics, pp. 961-966, 2010. https://doi.org/10.1109/AIM.2010.5695801

- [23] M. Zhou, J. Gui, B. Deng, D. Xu, and Y. Yan, “Fiducial marker-based metric initialization for monocular SLAM,” 5th Int. Conf. on Automation, Control and Robotics Engineering, pp. 630-635, 2020. https://doi.org/10.1109/CACRE50138.2020.9230158

- [24] Y. Song, B. Lian, and C. Li, “Indoor integrated navigation for differential wheeled robot using ceiling artificial landmark,” Proc. 2013 Int. Conf. on Mechatronic Sciences, Electric Engineering and Computer, pp. 828-831, 2013. https://doi.org/10.1109/MEC.2013.6885173

- [25] Z. Mikulová, F. Duchoň, M. Dekan, and A. Babinec, “Localization of mobile robot using visual system,” Int. J. of Advanced Robotic Systems, Vol.14, No.5, 2017. https://doi.org/10.1177/1729881417736085

- [26] J.-Y. Lee and W. Yu, “Robust self-localization of ground vehicles using artificial landmark,” 11th Int. Conf. on Ubiquitous Robots and Ambient Intelligence, pp. 303-307, 2014. https://doi.org/10.1109/URAI.2014.7057439

- [27] G. Lan, J. Wang, and W. Chen, “An improved indoor localization system for mobile robots based on landmarks on the ceiling,” 2016 IEEE Int. Conf. on Robotics and Biomimetics, pp. 1395-1400, 2016. https://doi.org/10.1109/ROBIO.2016.7866522

- [28] A. Alabbas, M. A. Cabrera, O. Alyounes, and D. Tsetserukou, “ArUcoGlide: A novel wearable robot for position tracking and haptic feedback to increase safety during human-robot interaction,” IEEE 28th Int. Conf. on Emerging Technologies and Factory Automation, 2023. https://doi.org/10.1109/ETFA54631.2023.10275727

- [29] A. Bousaid, T. Theodoridis, and S. Nefti-Meziani, “Introducing a novel marker-based geometry model in monocular vision,” 13th Workshop on Positioning, Navigation and Communications, 2016. https://doi.org/10.1109/WPNC.2016.7822857

- [30] R. Chand, A. Prasad, B. Sharma, and J. Vanualailai, “Landmark aided navigation of a point-mass robot via Lyapunov-based control scheme,” Asia-Pacific World Congress on Computer Science and Engineering, 2014. https://doi.org/10.1109/APWCCSE.2014.7053847

- [31] C. Buchner, P. Gsellmann, M. M. Merkumians, and G. Schitter, “Eye-in-hand pose estimation of industrial robots,” 49th Annual Conf. of the IEEE Industrial Electronics Society, 2023. https://doi.org/10.1109/IECON51785.2023.10312053

- [32] A. Tourani, H. Bavle, J. L. Sanchez-Lopez, R. M. Salinas, and H. Voos, “Marker-based visual SLAM leveraging hierarchical representations,” 2023 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, pp. 3461-3467, 2023. https://doi.org/10.1109/IROS55552.2023.10341891

- [33] M. Beinhofer, J. Müller, and W. Burgard, “Near-optimal landmark selection for mobile robot navigation,” 2011 IEEE Int. Conf. on Robotics and Automation, pp. 4744-4749, 2011. https://doi.org/10.1109/ICRA.2011.5979871

- [34] B. Dzodzo, L. Han, X. Chen, H. Qian, and Y. Xu, “Realtime 2D code based localization for indoor robot navigation,” 2013 IEEE Int. Conf. on Robotics and Biomimetics, pp. 486-492, 2013. https://doi.org/10.1109/ROBIO.2013.6739507

- [35] M. Zhang et al., “Visual-marker-inertial fusion localization system using sliding window optimization,” 33rd Chinese Control and Decision Conf., pp. 3566-3572, 2021. https://doi.org/10.1109/CCDC52312.2021.9602344

- [36] Z. Jiang et al., “A mobile robot indoor positioning system based on ArUco array and extended Kalman filter,” 2023 China Automation Congress (CAC), pp. 562-567, 2023. https://doi.org/10.1109/CAC59555.2023.10450326

- [37] G. Lei, X. Xu, X. Yu, Y. Wang, and T. Qu, “An indoor localization method for humanoid robot based on artificial landmark,” 5th Int. Conf. on Instrumentation and Measurement, Computer, Communication and Control, pp. 1854-1857, 2015. https://doi.org/10.1109/IMCCC.2015.394

- [38] N. Strisciuglio, M. L. Vallina, N. Petkov, and R. M. Salinas, “Camera localization in outdoor garden environments using artificial landmarks,” 2018 IEEE Int. Work Conf. on Bioinspired Intelligence, 2018. https://doi.org/10.1109/IWOBI.2018.8464139

- [39] W. Serna, G. Daza, and N. Izquierdo, “Planar approximation of three-dimensional data for refinement of marker-based tracking algorithm,” 2016 XXI Symp. on Signal Processing, Images and Artificial Vision, 2016. https://doi.org/10.1109/STSIVA.2016.7743362

- [40] C. d. S. Fernandes, M. F. M. Campos, and L. Chaimowicz, “A low-cost localization system based on artificial landmarks,” 2012 Brazilian Robotics Symp. and Latin American Robotics Symp, pp. 109-114, 2012. https://doi.org/10.1109/SBR-LARS.2012.25

- [41] Y. Xie et al., “A4LidarTag: Depth-based fiducial marker for extrinsic calibration of solid-state Lidar and camera,” IEEE Robotics and Automation Letters, Vol.7, No.3, pp. 6487-6494, 2022. https://doi.org/10.1109/LRA.2022.3173033

- [42] Q. Zeng et al., “An indoor 2-D LiDAR SLAM and localization method based on artificial landmark assistance,” IEEE Sensors J., Vol.24, No.3, pp. 3681-3692, 2024. https://doi.org/10.1109/JSEN.2023.3341832

- [43] Y.-C. Lee, Christiand, H. Chae, and W. Yu, “Artificial landmark map building method based on grid SLAM in large scale indoor environment,” 2010 IEEE Int. Conf. on Systems, Man and Cybernetics, pp. 4251-4256, 2010. https://doi.org/10.1109/ICSMC.2010.5642489

- [44] M. Li et al., “Artificial landmark positioning system using omnidirectional vision for agricultural vehicle navigation,” 2nd Int. Conf. on Intelligent System Design and Engineering Application, pp. 665-669, 2012. https://doi.org/10.1109/ISdea.2012.730

- [45] M. Beinhofer, H. Kretzschmar, and W. Burgard, “Deploying artificial landmarks to foster data association in simultaneous localization and mapping,” 2013 IEEE Int. Conf. on Robotics and Automation, pp. 5235-5240, 2013. https://doi.org/10.1109/ICRA.2013.6631325

- [46] T. Almeida, V. Santos, B. Lourenço, and P. Fonseca, “Detection of data matrix encoded landmarks in unstructured environments using deep learning,” 2020 IEEE Int. Conf. on Autonomous Robot Systems and Competitions, pp. 74-80, 2020. https://doi.org/10.1109/ICARSC49921.2020.9096211

- [47] J.-K. Huang, S. Wang, M. Ghaffari, and J. W. Grizzle, “LiDARTag: A real-time fiducial tag system for point clouds,” IEEE Robotics and Automation Letters, Vol.6, No.3, pp. 4875-4882, 2021. https://doi.org/10.1109/LRA.2021.3070302

- [48] M. Beinhofer, J. Müller, A. Krause, and W. Burgard, “Robust landmark selection for mobile robot navigation,” 2013 IEEE/RSJ Int. Conf. on Intelligent Robots and Systems, pp. 2637-2643, 2013. https://doi.org/10.1109/IROS.2013.6696728

- [49] B. P. E. Alvarado Vasquez, R. Gonzalez, F. Matia, and P. De La Puente, “Sensor fusion for tour-guide robot localization,” IEEE Access, Vol.6, pp. 78947-78964, 2018. https://doi.org/10.1109/ACCESS.2018.2885648

- [50] L. E. Ortiz-Fernandez, E. V. Cabrera-Avila, B. M. F. da Silva, and L. M. G. Gonçalves, “Smart artificial markers for accurate visual mapping and localization,” Sensors, Vol.21, No.2, Article No.625, 2021. https://doi.org/10.3390/s21020625

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
