Paper:

A Detection System for Measuring the Patient’s Interest in Animal Robot Therapy Observation

Dwi Kurnia Basuki*,**, Azhar Aulia Saputra*, Naoyuki Kubota*, Kaoru Inoue***, Yoshiki Shimomura*, and Kazuyoshi Wada*

*Graduate School of Systems Design, Tokyo Metropolitan University

6-6 Asahigaoka, Hino, Tokyo 191-0065, Japan

**Department of Informatics and Computer Engineering, Politeknik Elektronika Negeri Surabaya

Jl. Raya ITS, Sukolilo, Surabaya, Jawa Timur 60111, Indonesia

***Graduate School of Human Health Sciences, Tokyo Metropolitan University

7-2-10 Higashi-ogu, Arakawa-ku, Tokyo 116-8551, Japan

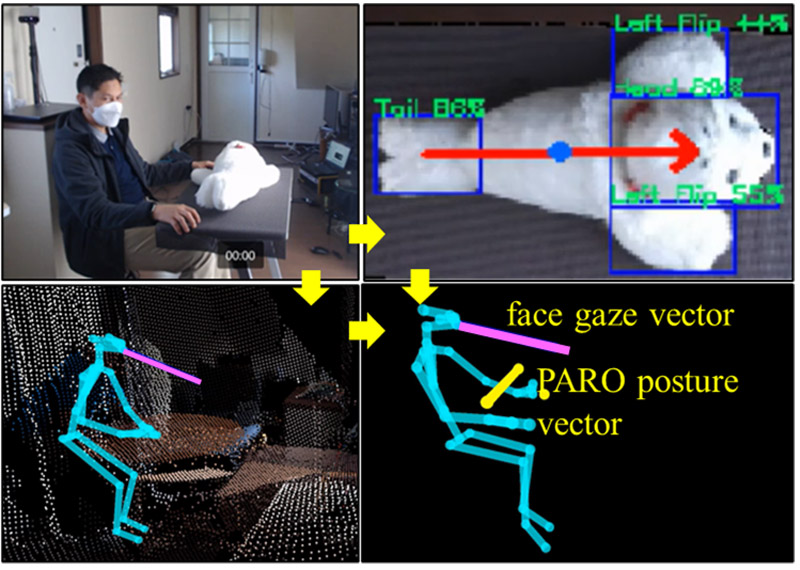

PARO, an animal-like robot, has shown a positive impact as a robot-assisted, non-pharmacological therapy. However, because caregivers differ in skill, how effectively they present PARO to patients needs to be evaluated: the way a caregiver presents PARO affects the patient’s interest in it. We propose a system that measures patients’ interest in PARO by recognizing the reciprocal attention between PARO and humans. The system integrates human skeleton tracking with PARO posture detection. Human skeletons are reconstructed from multiple Kinect Azure devices, while PARO’s posture is detected using a single RGB-D camera placed above the field. We extract the attention parameter by calculating the difference in gaze direction between PARO and humans. The results showed that the proposed method detected the gaze interaction between PARO and humans with an average accuracy of 97.59%.

Human skeleton–PARO posture integration
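
The abstract describes the attention parameter only as the difference in gaze direction between PARO and humans. As a minimal sketch of one way such a parameter could be computed, the Python below measures how far the human's gaze deviates from the direction toward PARO, and how far PARO's heading deviates from the direction toward the human. The function names, the 3D vector inputs, and the 20-degree threshold are all assumptions made for illustration; they are not taken from the paper.

```python
import math


def angle_between(u, v):
    """Angle in degrees between two non-zero 3D vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    # Clamp to [-1, 1] to guard against floating-point rounding.
    cos_theta = max(-1.0, min(1.0, dot / (norm_u * norm_v)))
    return math.degrees(math.acos(cos_theta))


def gaze_offsets(human_head, human_gaze, paro_pos, paro_heading):
    """Return (human_offset, paro_offset) in degrees.

    human_offset: angle between the human's gaze and the direction to PARO.
    paro_offset:  angle between PARO's heading and the direction to the human.
    All inputs are hypothetical 3D vectors in a shared world frame.
    """
    to_paro = [p - h for p, h in zip(paro_pos, human_head)]
    to_human = [-c for c in to_paro]
    return (angle_between(human_gaze, to_paro),
            angle_between(paro_heading, to_human))


def is_mutual_attention(human_head, human_gaze, paro_pos, paro_heading,
                        threshold_deg=20.0):
    """True when both offsets fall within the (assumed) threshold."""
    human_offset, paro_offset = gaze_offsets(
        human_head, human_gaze, paro_pos, paro_heading)
    return human_offset <= threshold_deg and paro_offset <= threshold_deg
```

In this sketch, the head position and gaze vector would come from the tracked skeleton and PARO's position and heading from the overhead camera; how those quantities are actually estimated is outside what the abstract states.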

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.