Paper:

Gesture Interface with Pointing Direction Classification by Deep Learning Based on RGB Image of Fovea Camera

Takahiro Ikeda, Tsubasa Imamura, Satoshi Ueki, and Hironao Yamada

Faculty of Engineering, Gifu University

1-1 Yanagido, Gifu, Gifu 501-1193, Japan

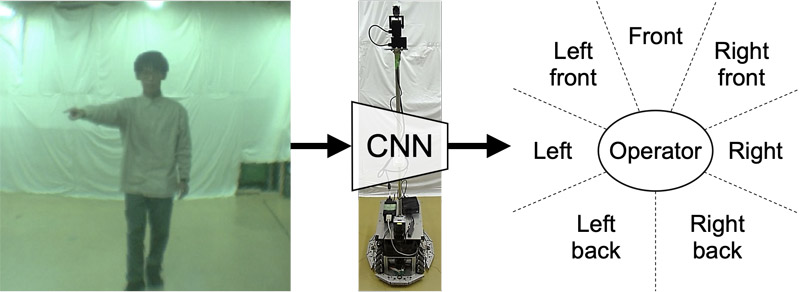

This paper describes a gesture interface for operating autonomous mobile robots (AMRs) used for transportation in industrial factories. The proposed interface recognizes the pointing direction of human operators (factory workers) by deep learning on RGB images captured by a fovea-lens camera. It classified pointing gestures into seven directions with a recognition accuracy of 0.89. This paper also introduces a navigation method that allows the AMR to act on the recognized gesture: the AMR approaches the pointed target by adjusting its horizontal angle based on object recognition in RGB images. Using the proposed gesture interface together with this navigation method, the AMR reached the target with high accuracy, with a mean position error of 0.052 m.
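The abstract states only that the AMR adjusts its horizontal angle based on object recognition in RGB images. The sketch below shows one common way such a correction can be computed; it is not the authors' method. It assumes an undistorted pinhole camera (focal length `fx` and principal point column `cx` in pixels), a detector that returns an axis-aligned bounding box, and a saturated proportional yaw-rate controller with a deadband. The names `BoundingBox`, `horizontal_angle_error`, and `yaw_rate_command`, as well as the gain, deadband, and rate limit, are hypothetical. If the images came from the fovea-lens camera, the pinhole model would have to be replaced by that lens's own projection model.

```python
import math
from dataclasses import dataclass


@dataclass
class BoundingBox:
    """Axis-aligned detection box in pixel coordinates (hypothetical detector output)."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float

    @property
    def center_u(self) -> float:
        # Horizontal pixel coordinate of the box centre.
        return 0.5 * (self.x_min + self.x_max)


def horizontal_angle_error(box: BoundingBox, fx: float, cx: float) -> float:
    """Horizontal bearing (rad) of the box centre relative to the optical axis.

    Assumes an undistorted pinhole camera: fx is the focal length in pixels and
    cx is the principal-point column. A positive value means the target lies to
    the right of the image centre.
    """
    return math.atan2(box.center_u - cx, fx)


def yaw_rate_command(angle_error: float,
                     k_p: float = 1.0,
                     deadband: float = math.radians(1.0),
                     max_rate: float = 0.5) -> float:
    """Saturated proportional yaw-rate command (rad/s) toward the target.

    Assumed sign convention: a positive yaw rate turns the robot left
    (counter-clockwise), so a target on the right yields a negative command.
    Errors smaller than the deadband are treated as already aligned.
    """
    if abs(angle_error) < deadband:
        return 0.0
    command = -k_p * angle_error
    return max(-max_rate, min(max_rate, command))
```

For example, with `fx = 500` and `cx = 320`, a box whose centre lies at column 420 gives an angle error of `atan(100 / 500)`, about 0.197 rad, and a yaw-rate command of about -0.197 rad/s. The deadband stops the robot from oscillating around the target once it is nearly aligned, and the saturation limits the turn rate when the target first appears far from the image centre.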

Pointing direction classification for AMR


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.