Paper:

Application of Visual Servoing to Drawer Retrieval and Storage Operations

Kotoya Hasegawa* and Shogo Arai**

*Department of Mechanical and Aerospace Engineering, Graduate School of Science and Technology, Tokyo University of Science

2641 Yamazaki, Noda, Chiba 278-8510, Japan

**Department of Mechanical and Aerospace Engineering, Faculty of Science and Technology, Tokyo University of Science

2641 Yamazaki, Noda, Chiba 278-8510, Japan

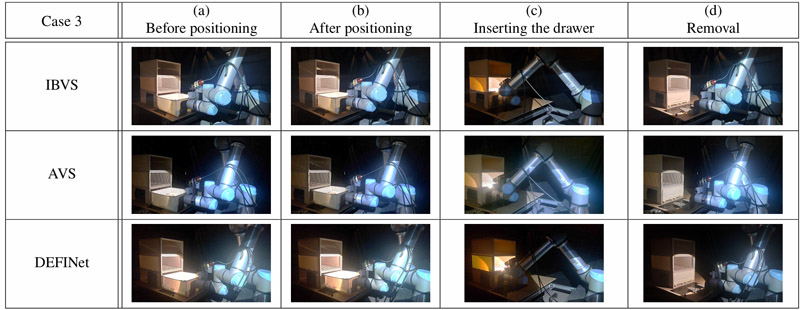

The number of logistics warehouses is increasing with the expansion of the e-commerce market. Among warehouse tasks, the retrieval, transportation, and storage of containers that hold products on shelves are increasingly being automated because of labor shortages and the physical strain on workers. However, automated warehouse systems face several challenges: they require extensive space for implementation, rail-based positioning incurs high maintenance costs, and positioning with augmented reality markers or other signs attached to each container and shelf incurs high pre-adjustment costs. To solve these problems, we aim to develop a system that retrieves, transports, and stores containers without relying on positioning aids such as rails or signs. Specifically, we propose a system in which a fork-inspired hand handles a drawer equipped with fork pockets for retrieval and storage operations, taking advantage of the space efficiency and ease of product management that drawers offer. For positioning, we use visual servoing with images obtained from a camera mounted at the end of the robot arm. In this study, we implement the following three methods in the proposed system and compare them: image-based visual servoing (IBVS), which uses luminance values directly as features; active visual servoing (AVS), which projects patterned light onto an object for positioning; and convolutional neural network-based visual servoing (CNN-VS), which infers the relative pose from the difference between the feature maps of the desired and current images. The experimental results show that CNN-VS outperforms the other two methods in terms of operation success rate.
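The abstract does not give the control law used for the luminance-based variant. As a rough illustration only, the sketch below follows the standard photometric formulation from the visual servoing literature: each pixel contributes one row $L_I = -(\nabla I_x L_x + \nabla I_y L_y)$ to the interaction matrix, and the camera velocity is $v = -\lambda L_I^{+}(I - I^{*})$. The function names, the constant-depth assumption, the gain value, and the use of the desired image to build the interaction matrix are all assumptions made for this example, not details taken from the paper.

```python
import numpy as np


def luminance_interaction_matrix(image, depth, fx, fy, cx, cy):
    """Return an (H*W, 6) matrix with one row per pixel (hypothetical helper).

    depth may be a scalar (constant-depth assumption) or an (H, W) array.
    fx, fy, cx, cy are pinhole camera intrinsics in pixels.
    """
    h, w = image.shape
    # Pixel-space gradients: axis 0 is the v (row) direction, axis 1 is u.
    grad_v, grad_u = np.gradient(image.astype(float))
    # Convert to gradients with respect to normalized image coordinates.
    ix = (grad_u * fx).ravel()
    iy = (grad_v * fy).ravel()
    # Normalized coordinates of every pixel.
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    x = ((u - cx) / fx).ravel()
    y = ((v - cy) / fy).ravel()
    z = np.broadcast_to(depth, (h, w)).astype(float).ravel()
    zeros = np.zeros_like(z)
    # Rows of the classical point-feature interaction matrix.
    lx = np.stack([-1.0 / z, zeros, x / z, x * y, -(1.0 + x**2), y], axis=1)
    ly = np.stack([zeros, -1.0 / z, y / z, 1.0 + y**2, -x * y, -x], axis=1)
    return -(ix[:, None] * lx + iy[:, None] * ly)


def servo_velocity(current, desired, depth, fx, fy, cx, cy, gain=0.5):
    """Camera twist (vx, vy, vz, wx, wy, wz) from the luminance error."""
    error = (current.astype(float) - desired.astype(float)).ravel()
    # Assumption: the interaction matrix is evaluated once at the desired image.
    L = luminance_interaction_matrix(desired, depth, fx, fy, cx, cy)
    return -gain * np.linalg.pinv(L) @ error
```

In a servo loop, `servo_velocity` would be called on every new camera frame and the resulting twist sent to the arm until the luminance error falls below a threshold. When the current and desired images coincide, the error is zero and so is the commanded velocity.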

A robot system for storage operations with visual servoing

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
