Paper:

Improvement of Accuracy of Position and Orientation Estimation of AMR Using Camera Tracking and SLAM

Yasuaki Omi, Hibiki Sasa, Daiki Kato, Eiichi Aoyama, Toshiki Hirogaki, and Masao Nakagawa

Doshisha University

1-3 Tataramiyakodani, Kyotanabe, Kyoto 610-0394, Japan

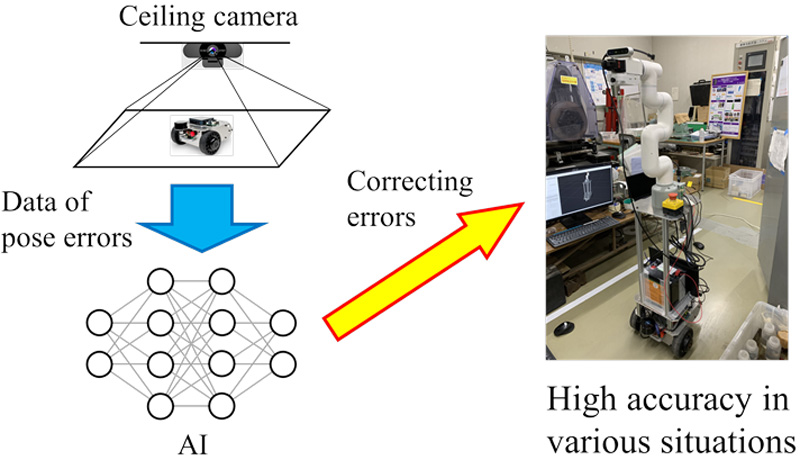

To cope with variable product types and production quantities in factories and a wide variety of orders in distribution centers, demand is growing for autonomous mobile robots (AMRs), which are not restricted by guide rails, as replacements for automated guided vehicles (AGVs). Accurate localization and orientation estimation are necessary to realize a stable and highly efficient AMR transport system. Even if cooperative robots are assumed to compensate for the localization and orientation errors generated by AMRs, the errors of current AMRs exceed the operating range of the cooperative robots, so the errors must at least be brought within that range. To achieve this, it is important to investigate how the localization and orientation accuracy of an AMR changes during operation and to correct its position and orientation accordingly. In this study, we measured the actual pose of an AMR with a ceiling camera while the AMR was operating and examined its localization and orientation accuracy sequentially as the course geometry changed. We then used these data to correct the position and orientation. We found a linear relationship between the orientation estimation error and the angular velocity, and the accuracy could be improved by correcting the orientation estimates using this relationship. The accuracy of localization and orientation estimation could also be improved by training neural networks on sine and cosine data, instead of raw angular data, to learn the errors from the ceiling camera and simultaneous localization and mapping (SLAM) data, and then correcting those errors.
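The two corrections named in the abstract can be illustrated with a minimal sketch. The abstract gives neither the fitted coefficients nor the network architecture, so everything below is an assumption made for illustration: the function names are hypothetical, the orientation error is taken to be the SLAM yaw minus the ceiling-camera yaw, and an ordinary least-squares line stands in for whatever fitting procedure the authors used. The second part shows only the sine/cosine encoding of an angle, which avoids the jump at ±π that raw angular data would present to a neural network; the network itself is not sketched.

```python
import numpy as np


def wrap_angle(theta):
    """Wrap angles in radians to the interval [-pi, pi)."""
    return (theta + np.pi) % (2.0 * np.pi) - np.pi


def fit_linear_error_model(omega, yaw_error):
    """Least-squares fit of yaw_error ~ a * omega + b.

    omega     : angular velocity samples [rad/s]
    yaw_error : assumed here to be SLAM yaw minus camera yaw [rad]
    """
    a, b = np.polyfit(omega, yaw_error, deg=1)
    return a, b


def correct_yaw(yaw_slam, omega, a, b):
    """Subtract the predicted error from the SLAM yaw and re-wrap."""
    return wrap_angle(yaw_slam - (a * omega + b))


def encode_angle(theta):
    """Represent each angle as a (sin, cos) pair, e.g. as NN input/target."""
    return np.stack([np.sin(theta), np.cos(theta)], axis=-1)


def decode_angle(sin_cos):
    """Recover the angle in (-pi, pi] from a (sin, cos) pair."""
    return np.arctan2(sin_cos[..., 0], sin_cos[..., 1])
```

In use, `fit_linear_error_model` would be called once on logged data for which both the SLAM estimate and the camera measurement are available, and `correct_yaw` would then be applied to new SLAM estimates. With the encoding, headings just either side of ±π map to nearly identical (sin, cos) pairs, so a small physical difference stays small in the training data.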

Improving accuracy of pose using NNs

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
