Paper:

Optimal Clustering of Point Cloud by 2D-LiDAR Using Kalman Filter

Shuncong Shen*1, Mai Saito*1, Yuka Uzawa*2, and Toshio Ito*3,*4

*1Department of Machinery and Control Systems, Graduate School of Systems Engineering and Science, Shibaura Institute of Technology

307 Fukasaku, Minuma-ku, Saitama City, Saitama 337-8570, Japan

*2College of Systems Engineering and Science, Shibaura Institute of Technology

307 Fukasaku, Minuma-ku, Saitama City, Saitama 337-8570, Japan

*3Department of Machinery and Control Systems, Shibaura Institute of Technology

307 Fukasaku, Minuma-ku, Saitama City, Saitama 337-8570, Japan

*4Hyper Digital Twins Co., Ltd.

2-1-17 Nihonbashi, Chuo-ku, Tokyo 103-0027, Japan

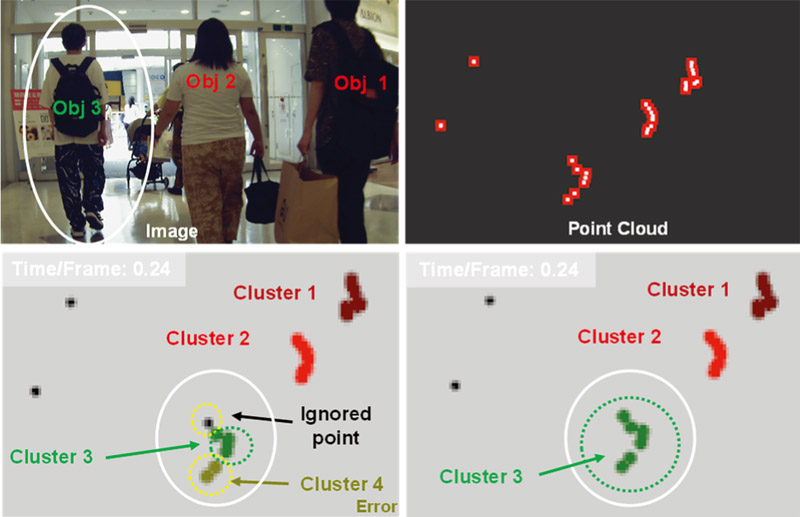

Light detection and ranging (LiDAR) has been the primary sensor for autonomous mobility and navigation systems owing to its stability. Although multiple-channel LiDAR (3D-LiDAR) can obtain dense point clouds that provide optimal performance for several tasks, its high cost limits its range of applications. When single-channel LiDAR (2D-LiDAR) is employed as a low-cost alternative, the limited quantity and quality of its point cloud cause conventional methods to perform poorly in clustering and tracking tasks. In particular, in scenarios with multiple pedestrians, the points belonging to different pedestrians cannot be distinguished, and clustering fails. Hence, we propose an optimized clustering method combined with a Kalman filter (KF) for simultaneous clustering and tracking that is applicable to 2D-LiDAR.

Results with and without our Kalman filter-based method
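The abstract does not spell out the algorithm, so the following is only a minimal sketch under our own assumptions, not the authors' method. It contrasts a conventional gap-based Euclidean clustering of an angle-ordered 2D scan, which merges two pedestrians walking close together, with a Kalman-filter-assisted variant in which each tracked pedestrian's predicted position is used to assign points before clustering. All names (`euclidean_clusters`, `AxisKF`, `Track`, `kf_assisted_clusters`) and all parameter values (`q`, `r`, `gate`, `threshold`, `dt`) are hypothetical. Each track uses independent constant-velocity filters for x and y, which is equivalent to a single 4-state constant-velocity filter when the noise on the two axes is assumed independent.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def euclidean_clusters(points: List[Point], threshold: float) -> List[List[Point]]:
    """Baseline: split an angle-ordered 2D scan wherever two consecutive
    points are farther apart than `threshold` (metres)."""
    clusters: List[List[Point]] = []
    for p in points:
        if clusters and math.dist(clusters[-1][-1], p) <= threshold:
            clusters[-1].append(p)
        else:
            clusters.append([p])
    return clusters


def centroid(cluster: List[Point]) -> Point:
    n = len(cluster)
    return (sum(p[0] for p in cluster) / n, sum(p[1] for p in cluster) / n)


class AxisKF:
    """Constant-velocity Kalman filter for one axis, state = [position, velocity]."""

    def __init__(self, pos: float, q: float = 0.5, r: float = 0.01):
        self.x = [pos, 0.0]                 # state estimate
        self.P = [[1.0, 0.0], [0.0, 1.0]]   # state covariance
        self.q, self.r = q, r               # assumed process / measurement noise

    def predict(self, dt: float) -> float:
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        q = self.q
        # P = F P F^T + Q, F = [[1, dt], [0, 1]], Q from white acceleration noise
        self.P = [[a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
                  [c + dt * d + q * dt**3 / 2, d + q * dt**2]]
        return self.x[0]

    def update(self, z: float) -> None:
        (a, b), (c, d) = self.P
        s = a + self.r                      # innovation covariance, H = [1, 0]
        k0, k1 = a / s, c / s               # Kalman gain K = P H^T / s
        y = z - self.x[0]                   # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1 - k0) * a, (1 - k0) * b],   # P = (I - K H) P
                  [c - k1 * a, d - k1 * b]]


class Track:
    """One tracked pedestrian: independent x and y filters."""

    def __init__(self, start: Point):
        self.kx, self.ky = AxisKF(start[0]), AxisKF(start[1])

    def predict(self, dt: float) -> Point:
        return (self.kx.predict(dt), self.ky.predict(dt))

    def update(self, z: Point) -> None:
        self.kx.update(z[0])
        self.ky.update(z[1])


def kf_assisted_clusters(points: List[Point], tracks: List[Track], dt: float,
                         gate: float, threshold: float) -> List[List[Point]]:
    """Assign each point to the nearest KF-predicted track within `gate`;
    leftover points fall back to the Euclidean baseline."""
    predicted = [t.predict(dt) for t in tracks]
    groups: List[List[Point]] = [[] for _ in tracks]
    leftover: List[Point] = []
    for p in points:
        dists = [math.dist(p, c) for c in predicted]
        if dists and min(dists) <= gate:
            groups[dists.index(min(dists))].append(p)
        else:
            leftover.append(p)
    for track, group in zip(tracks, groups):
        if group:                           # correct only tracks that received points
            track.update(centroid(group))
    return [g for g in groups if g] + euclidean_clusters(leftover, threshold)


if __name__ == "__main__":
    # Two pedestrians about 0.25 m apart, sampled every 0.05 m along x.
    scan = [(0.90 + 0.05 * i, 2.0) for i in range(10)]
    tracks = [Track((1.0, 2.0)), Track((1.25, 2.0))]
    print(len(euclidean_clusters(scan, threshold=0.1)))   # 1: the pedestrians merge
    print(len(kf_assisted_clusters(scan, tracks, dt=0.1, gate=0.2, threshold=0.1)))  # 2
```

Gating on the predicted positions, rather than on the raw gap between neighbouring points, is what lets this sketch separate the two nearby pedestrians. The trade-off is that a newly appearing pedestrian is first returned as a leftover Euclidean cluster, and starting a track for it (track initiation) is not shown here.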

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.