Paper:

Multi-Thread AI Cameras Using High-Speed Active Vision System

Mingjun Jiang*1, Zihan Zhang*2, Kohei Shimasaki*3, Shaopeng Hu*4, and Idaku Ishii*4

*1Innovative Research Excellence, Honda R&D Co., Ltd.

Midtown Tower 38F, 9-7-1 Akasaka, Minato-ku, Tokyo 107-6238, Japan

*2DENSO TEN Limited

1-2-28 Goshodori, Hyogo-ku, Kobe 652-8510, Japan

*3Digital Monozukuri (Manufacturing) Education Research Center, Hiroshima University

3-10-32 Kagamiyama, Higashi-hiroshima, Hiroshima 739-0046, Japan

*4Graduate School of Advanced Science and Engineering, Hiroshima University

1-4-1 Kagamiyama, Higashi-hiroshima, Hiroshima 739-8527, Japan

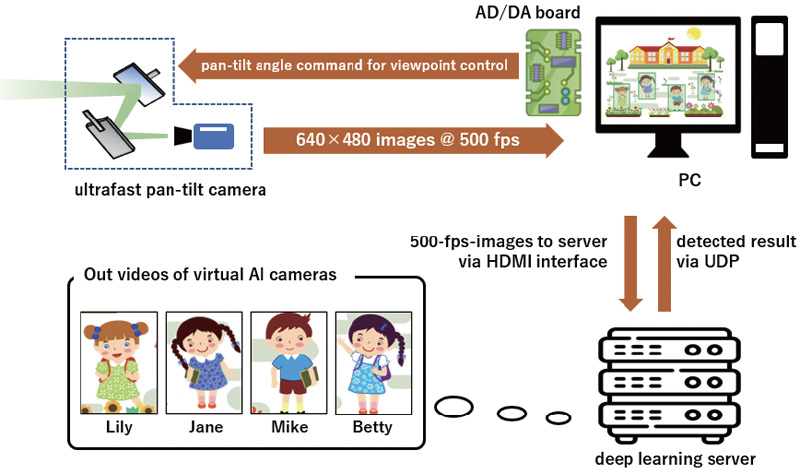

In this study, we propose a multi-thread artificial intelligence (AI) camera system that can simultaneously recognize remote objects in multiple desired areas of interest (AOIs) distributed across a wide field of view (FOV) using a single image sensor. The proposed multi-thread AI camera consists of an ultrafast active vision system and a convolutional neural network (CNN)-based ultrafast object recognition system. The ultrafast active vision system can function as multiple virtual cameras with high spatial resolution by synchronizing the exposure of a high-speed camera with the movement of an ultrafast two-axis mirror device at hundreds of hertz, and the CNN-based ultrafast object recognition system simultaneously recognizes the acquired high-frame-rate images in real time. The AOIs to be monitored can be determined automatically by rapidly scanning pre-placed visual anchors in the wide FOV at hundreds of frames per second (fps) with object recognition. The effectiveness of the proposed multi-thread AI camera system was demonstrated in several wide-area monitoring experiments on quick response (QR) codes and persons in natural, spacious scenes such as a meeting room, which are too wide for a single stationary camera with a wide-angle lens to acquire clear images of all AOIs simultaneously.
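The idea of one high-speed camera acting as several virtual cameras can be illustrated as time-division multiplexing: the mirror visits each AOI in turn, each exposure is tagged with the AOI the mirror was pointing at, and the interleaved frame stream is split back into one stream per AOI. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a fixed round-robin visiting order and a mirror that settles within one frame interval, and the class `AOI`, the functions `build_schedule`, `virtual_camera_fps`, and `demultiplex`, the AOI names and angles, and the 500 fps rate are all hypothetical.

```python
"""Hypothetical sketch: time-division multiplexing of one high-speed camera
into several virtual cameras by cycling a two-axis mirror through AOI angles.
Round-robin order and instantaneous mirror settling are assumptions."""
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AOI:
    name: str        # label of the virtual camera (must be unique)
    pan_deg: float   # hypothetical mirror pan angle aimed at this AOI
    tilt_deg: float  # hypothetical mirror tilt angle aimed at this AOI


def build_schedule(aois: List[AOI], camera_fps: float,
                   n_frames: int) -> List[Tuple[float, AOI]]:
    """Assign camera frames to AOIs in round-robin order.

    Returns (exposure_start_time_s, aoi) pairs; the mirror is assumed to be
    at the AOI's angles before each exposure starts.
    """
    if not aois:
        raise ValueError("need at least one AOI")
    period = 1.0 / camera_fps
    return [(k * period, aois[k % len(aois)]) for k in range(n_frames)]


def virtual_camera_fps(camera_fps: float, n_aois: int) -> float:
    """Each virtual camera receives every n-th frame of the physical camera."""
    if n_aois < 1:
        raise ValueError("need at least one AOI")
    return camera_fps / n_aois


def demultiplex(frames: List[object], aois: List[AOI]) -> Dict[str, List[object]]:
    """Route an interleaved frame stream into one stream per virtual camera."""
    streams: Dict[str, List[object]] = {a.name: [] for a in aois}
    for k, frame in enumerate(frames):
        streams[aois[k % len(aois)].name].append(frame)
    return streams


if __name__ == "__main__":
    # Illustrative values only; not taken from the paper.
    aois = [AOI("door", -10.0, 2.0), AOI("desk", 0.0, -3.0),
            AOI("window", 12.0, 5.0), AOI("board", 5.0, 8.0)]
    print(virtual_camera_fps(500.0, len(aois)))  # 125.0
    for t, aoi in build_schedule(aois, 500.0, 5):
        print(f"t={t * 1e3:.1f} ms -> {aoi.name} ({aoi.pan_deg}, {aoi.tilt_deg})")
```

The trade-off this makes explicit is that adding AOIs divides the physical frame rate among them, which is why a camera and mirror operating at hundreds of hertz are needed to keep each virtual camera usable for real-time recognition.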

Multi-thread AI camera system

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
