Paper:

Autonomous Path Travel Control of Mobile Robot Using Internal and External Camera Images in GPS-Denied Environments

Keita Yamada, Shoya Koga, Takashi Shimoda, and Kazuya Sato

Department of Mechanical Engineering, Faculty of Science and Engineering, Saga University

1 Honjo, Saga, Saga 840-8502, Japan

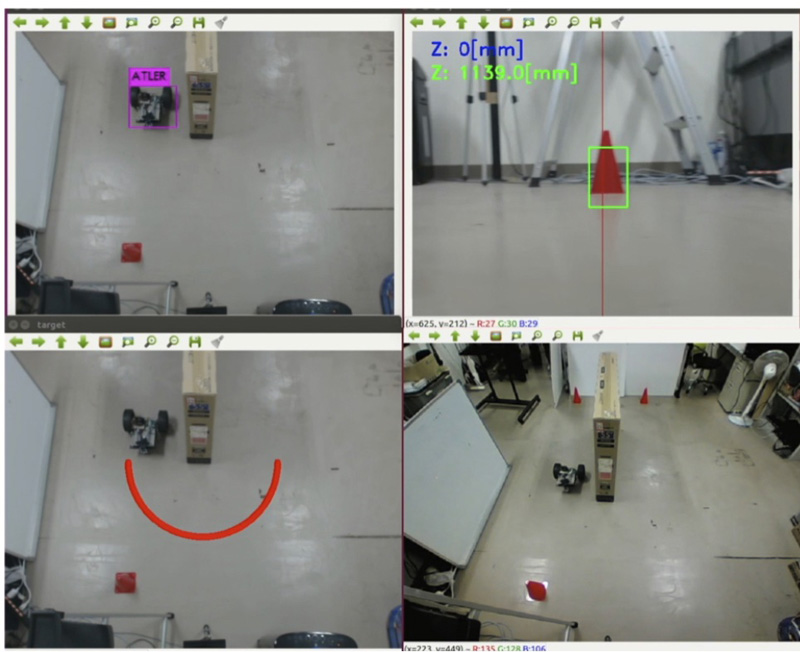

In this study, we developed a system that calculates the relative position and angle between a mobile robot and a marker from information such as the size of the marker in the image from the robot's internal camera. Using this information, the mobile robot travels autonomously along a path defined by marker placement. In addition, we propose a control system that follows a trajectory using information obtained by recognizing, through deep learning, the mobile robot as it appears in the image from an external camera. The proposed method makes it easy to achieve autonomous path travel control for mobile robots in environments where GPS signals cannot be received. The effectiveness of the proposed system is demonstrated through several real-world experiments.
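The abstract does not state how position and angle are derived from the marker's size, so the following is only a minimal sketch of one common approach, assuming an ideal pinhole camera and a marker of known physical width facing the camera. The function name, parameter names, and numeric values are hypothetical and are not taken from the paper.

```python
import math


def marker_range_and_bearing(marker_width_px, marker_center_u_px,
                             real_marker_width_m, focal_length_px,
                             principal_point_u_px):
    """Estimate distance and horizontal bearing to a marker.

    Assumes an ideal pinhole camera (no lens distortion) and a marker
    roughly facing the camera; both are illustrative assumptions.
    """
    if marker_width_px <= 0:
        raise ValueError("marker width in pixels must be positive")
    # Similar triangles: the width in the image shrinks in proportion to distance.
    distance_m = focal_length_px * real_marker_width_m / marker_width_px
    # The horizontal pixel offset from the optical axis gives the bearing angle.
    bearing_rad = math.atan2(marker_center_u_px - principal_point_u_px,
                             focal_length_px)
    return distance_m, bearing_rad


# Hypothetical values: a 0.20 m wide marker seen 100 px wide, centered
# 100 px to the right of the principal point, with a 600 px focal length.
distance, bearing = marker_range_and_bearing(
    marker_width_px=100.0,
    marker_center_u_px=420.0,
    real_marker_width_m=0.20,
    focal_length_px=600.0,
    principal_point_u_px=320.0,
)
print(f"distance = {distance:.2f} m, bearing = {math.degrees(bearing):.1f} deg")
```

In a sketch like this, the distance and bearing could serve as the error signals for a path-following controller. A real system would also need camera calibration and handling of oblique marker views.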

Autonomous path travel of mobile robot


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
