Paper:

Tomato Recognition for Harvesting Robots Considering Overlapping Leaves and Stems

Takeshi Ikeda*1, Ryo Fukuzaki*2, Masanori Sato*3, Seiji Furuno*4, and Fusaomi Nagata*1

*1Sanyo-Onoda City University

1-1-1 Daigaku-dori, Sanyoonoda, Yamaguchi 756-0884, Japan

*2Yanagiya Machinery Co., Ltd.

189-18 Yoshiwa, Ube, Yamaguchi 759-0134, Japan

*3Nagasaki Institute of Applied Science

536 Aba-machi, Nagasaki, Nagasaki 851-0193, Japan

*4National Institute of Technology, Kitakyushu College

5-20-1 Shii, Kokuraminami, Kitakyushu, Fukuoka 802-0985, Japan

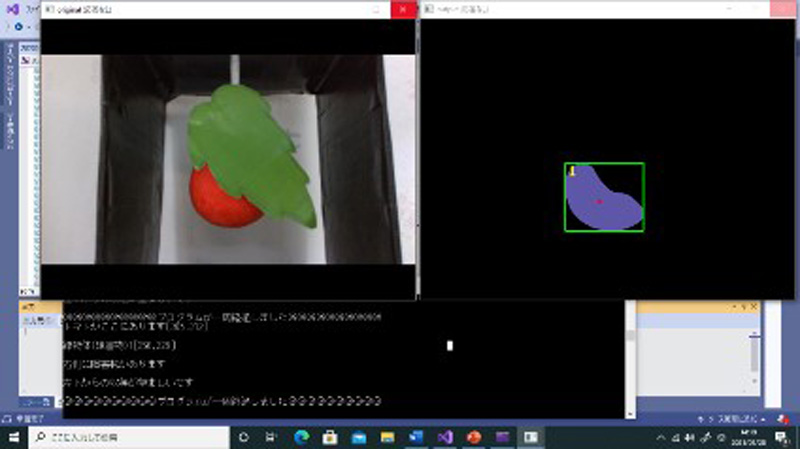

In recent years, the declining and aging population of farmers has become a serious problem. Smart agriculture, which uses robotics and information and communication technology to save labor, improve precision, and achieve high-quality production, has been promoted to address this problem. In this research, we focus on robots that harvest tomatoes. Tomatoes are delicate vegetables with thin skin and a relatively large yield. For automatic harvesting, the harvesting arm relies on input from image processing, which is crucial for determining the color of the tomatoes at the time of harvesting; research on image processing technology for robots is therefore indispensable for accurate operation of the arm. In an environment where tomatoes are harvested, obstacles such as leaves, stems, and unripe tomatoes must be taken into consideration. Therefore, we propose an image processing method that considers the surrounding obstacles and provides an appropriate route for the arm so that tomatoes can be harvested easily.

A hidden tomato was found under a leaf

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
