Paper:

Generating a Visual Map of the Crane Workspace Using Top-View Cameras for Assisting Operation

Yu Wang*, Hiromasa Suzuki*, Yutaka Ohtake*, Takayuki Kosaka**, and Shinji Noguchi**

*Department of Precision Engineering, The University of Tokyo

7-3-1 Hongo, Bunkyo, Tokyo 113-8656, Japan

**Tadano Ltd.

2217-13 Hayashi-cho, Takamatsu, Kagawa 761-0301, Japan

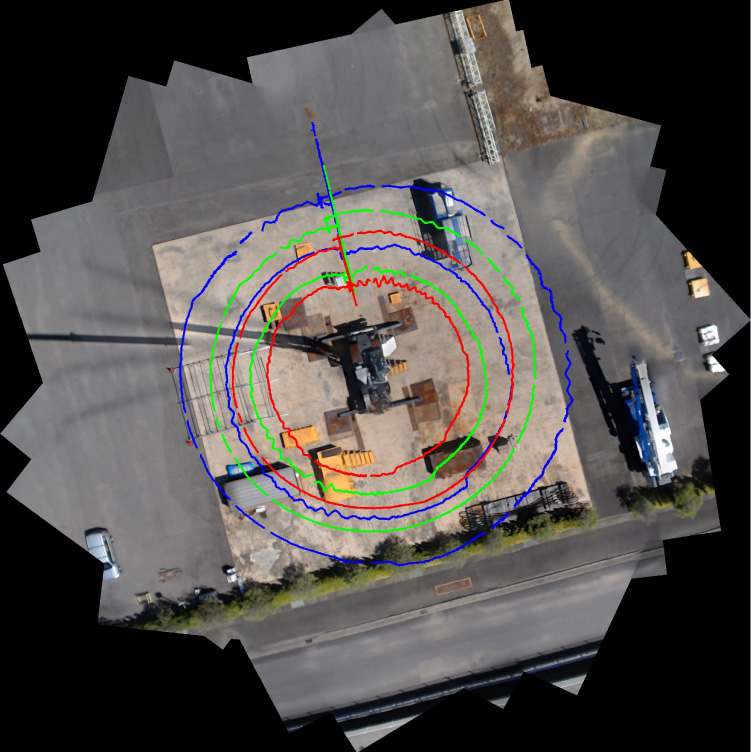

All-terrain cranes are widely used at construction sites. Their operators contend with blind spots, limited information, and a high mental workload. A top-view camera mounted on the boom head offers a valuable perspective on the workspace that can help eliminate blind spots and provide a basis for operation assistance. In this study, a visual 2D map of the crane workspace is generated from images captured by a top-view camera. Various types of information can be overlaid on this map to assist the operator, such as a record of the operation and the expected path of the boom head through the workspace. This paper describes how the visual map is generated by image stitching and how the boom head trajectory is located in that map. Preliminary proof-of-concept tests show that a precise map and projected trajectories can be generated via image-processing techniques that discriminate foreground objects from the scene below the crane. The location error is analyzed and verified to confirm the applicability of the method. These results indicate a way to help the operator perform precise operations more easily and to reduce the operator’s mental workload.

Workspace map with crane boom head location and motion path
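
As a rough illustration of how a boom head trajectory could be located in a stitched map, the sketch below chains frame-to-frame homographies and projects each frame's image center into the coordinates of the first frame. It is a minimal sketch under stated assumptions, not the method of the paper: it assumes the camera looks straight down so that the image center approximates the boom head position, and that the pairwise homographies have already been estimated (for example, from matched features with outlier rejection). The function names and the input values are hypothetical.

```python
import numpy as np

def project_point(H, point):
    """Apply a 3x3 homography H to a 2D point (x, y)."""
    x, y = point
    v = H @ np.array([x, y, 1.0])
    return v[:2] / v[2]  # back from homogeneous coordinates

def boom_head_trajectory(pairwise_homographies, image_size):
    """Project each frame's image center into the coordinates of frame 0.

    Assumption: pairwise_homographies[i] maps pixels of frame i+1 into frame i,
    and frame 0 defines the map coordinate system.
    """
    width, height = image_size
    center = (width / 2.0, height / 2.0)
    H_to_map = np.eye(3)
    trajectory = [project_point(H_to_map, center)]
    for H in pairwise_homographies:
        H_to_map = H_to_map @ H  # accumulate: frame k -> frame 0
        trajectory.append(project_point(H_to_map, center))
    return np.array(trajectory)

# Hypothetical input: each new frame is shifted 10 px to the right in the map.
shift = np.array([[1.0, 0.0, 10.0],
                  [0.0, 1.0, 0.0],
                  [0.0, 0.0, 1.0]])
path = boom_head_trajectory([shift, shift], image_size=(640, 480))
print(path)
```

Chaining pairwise transforms keeps the example short, but the error accumulates with every frame. A practical pipeline would typically refine the transforms jointly before drawing the trajectory.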

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
