Review:

Dynamic Intelligent Systems Based on High-Speed Vision

Taku Senoo*, Yuji Yamakawa**, Shouren Huang*, Keisuke Koyama*, Makoto Shimojo*, Yoshihiro Watanabe***, Leo Miyashita*, Masahiro Hirano*, Tomohiro Sueishi*, and Masatoshi Ishikawa*

*Graduate School of Information Science and Technology, The University of Tokyo

7-3-1 Hongo, Bunkyo-ku, Tokyo 113-8656, Japan

**Institute of Industrial Science, The University of Tokyo

4-6-1 Komaba, Meguro-ku, Tokyo 153-5452, Japan

***School of Engineering, Tokyo Institute of Technology

G2-31, 4259 Nagatsuta, Midori-ku, Yokohama, Kanagawa 226-8503, Japan

This paper presents an overview of the high-speed vision system that the authors have been developing, and its applications. First, examples of high-speed vision are presented, and image-related technologies are described. Next, we describe the use of vision systems to track flying objects at sonic speed. Finally, we present high-speed robotic systems that use high-speed vision for robotic control. Descriptions of the tasks that employ high-speed robots center on manipulation, bipedal running, and human-robot cooperation.

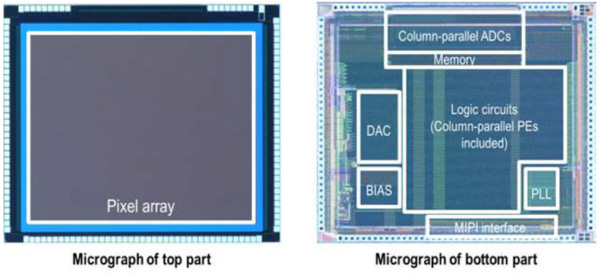

Stacked 1 ms high-speed vision chip

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
