Paper:

Color Extraction Using Multiple Photographs Taken with Different Exposure Time in RWRC

Kenji Yamauchi, Naoki Akai, and Koichi Ozaki

Graduate School of Engineering, Utsunomiya University

7-1-2 Yoto, Utsunomiya-City, Tochigi 321-8585, Japan

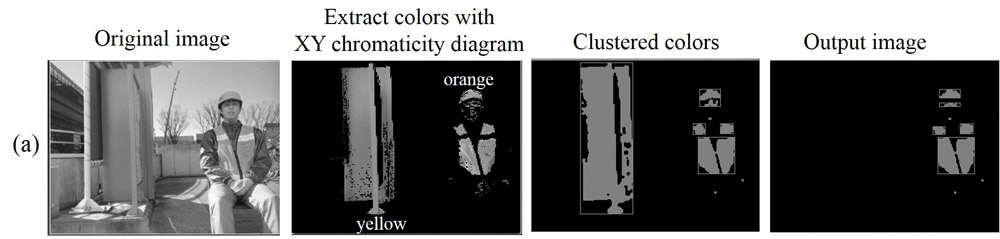

Color extraction result in RWRC


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
