Paper:

Comparative Analysis of PALSAR-2 and Geographical Features for Mapping Urban and Non-Urban Flooded Areas

Ryosuke Nagato*, Ira Karrel San Jose*, Sesa Wiguna**, Ryohei Kametaka*, Bruno Adriano**, Erick Mas**, and Shunichi Koshimura**,†

*Department of Civil and Environmental Engineering, Tohoku University

6-6-06 Aramaki Aza-Aoba, Aoba-ku, Sendai, Miyagi 980-8579, Japan

**International Research Institute of Disaster Science, Tohoku University

Sendai, Japan

†Corresponding author

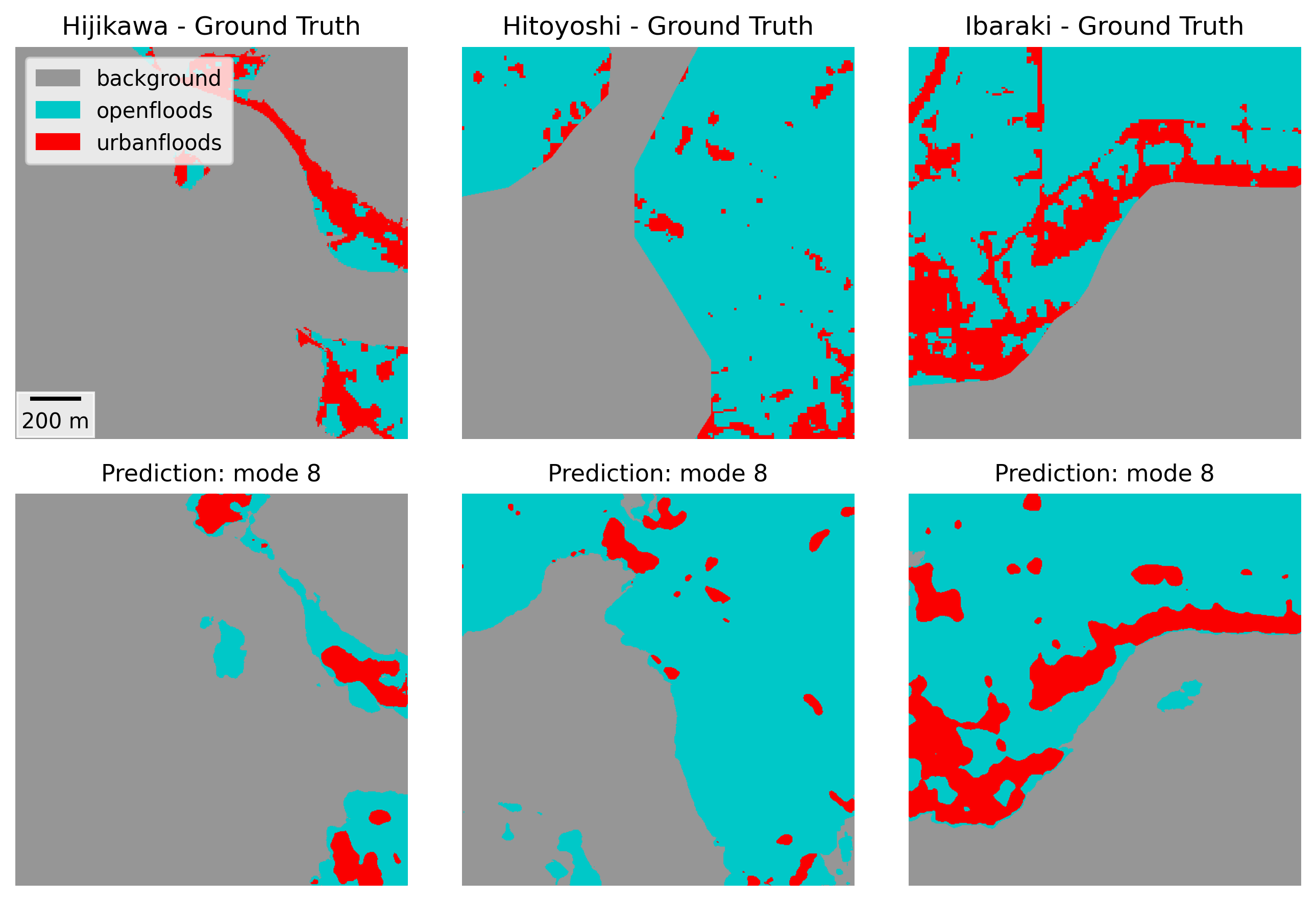

With recent extreme weather events, flood risk has been rising year by year, increasing the demand for fast and accurate flood mapping. Synthetic aperture radar (SAR) imagery has received considerable attention for flood mapping owing to its all-weather, day-and-night imaging capability. Although previous studies have achieved accurate mapping in non-urban areas, urban regions remain challenging. This study focuses on flood events in Japan and employs a deep learning model with PALSAR-2 imagery to classify three classes: non-flooded areas, floods in open areas, and floods in urban areas. To account for the complex backscattering characteristics specific to urban areas, this study investigates integrating geographical features, such as slope and building footprints, into the segmentation process. The experimental results suggest that including these supplementary data improves the prediction performance of the trained models.
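The abstract describes feeding PALSAR-2 imagery together with geographical layers (slope and building footprints) into a segmentation model that outputs three classes. The sketch below shows one plausible way to prepare such inputs with NumPy. It is not the authors' pipeline. Several details are assumptions made for illustration: the channel set (pre- and post-event backscatter plus their difference), the class codes, the per-tile standardization, the use of a hypothetical urban mask to split flooded pixels into open and urban floods, and all function names.

```python
import numpy as np

# Hypothetical class codes for the three target classes named in the abstract.
NON_FLOODED, OPEN_FLOOD, URBAN_FLOOD = 0, 1, 2


def slope_degrees(dem: np.ndarray, pixel_size: float) -> np.ndarray:
    """Terrain slope in degrees from a DEM on a regular grid (central differences)."""
    dz_dy, dz_dx = np.gradient(dem.astype(np.float64), pixel_size)
    return np.degrees(np.arctan(np.hypot(dz_dx, dz_dy)))


def to_db(intensity: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Convert linear SAR backscatter intensity to decibels (clipped to avoid log(0))."""
    return 10.0 * np.log10(np.maximum(intensity, eps))


def build_input_stack(sar_pre, sar_post, dem, building_mask, pixel_size):
    """Stack SAR and geographical layers into a (channels, H, W) float32 array.

    Assumed channel order (illustrative only):
      0: pre-event backscatter [dB], 1: post-event backscatter [dB],
      2: post minus pre difference [dB], 3: slope [deg], 4: building footprint (0/1).
    """
    pre_db, post_db = to_db(sar_pre), to_db(sar_post)
    layers = [
        pre_db,
        post_db,
        post_db - pre_db,
        slope_degrees(dem, pixel_size),
        building_mask.astype(np.float64),
    ]
    stack = np.stack(layers, axis=0)
    # Standardize each channel so dB values and slope degrees share a similar scale;
    # constant channels (std == 0) are only mean-centred.
    mean = stack.mean(axis=(1, 2), keepdims=True)
    std = stack.std(axis=(1, 2), keepdims=True)
    return ((stack - mean) / np.where(std > 0, std, 1.0)).astype(np.float32)


def three_class_labels(flood_mask, urban_mask):
    """Split a binary flood mask into open-area and urban floods (hypothetical rule)."""
    flood = np.asarray(flood_mask, dtype=bool)
    urban = np.asarray(urban_mask, dtype=bool)
    labels = np.full(flood.shape, NON_FLOODED, dtype=np.uint8)
    labels[flood & ~urban] = OPEN_FLOOD
    labels[flood & urban] = URBAN_FLOOD
    return labels
```

The resulting (channels, H, W) array could be passed to any multi-channel segmentation network. Which supplementary layers actually help is what the study's comparison sets out to examine.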

Flood mapping using SAR and geographical features

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.