Research Paper:

Braille Character Recognition Independent of Lighting Direction Using Object Detection and Segmentation Models

Akihiro Yamashita*, Hiroki Furukawa**, Taichi Shirakawa***, and Katsushi Matsubayashi*

*National Institute of Technology, Tokyo College

1220-2 Kunugida-machi, Hachioji, Tokyo 193-0997, Japan

**Institute of Science Tokyo

2-12-1 Ookayama, Meguro-ku, Tokyo 152-8550, Japan

***Graduate School of Media Design, Keio University

4-1-1 Hiyoshi, Kohoku-ku, Yokohama, Kanagawa 223-8526, Japan

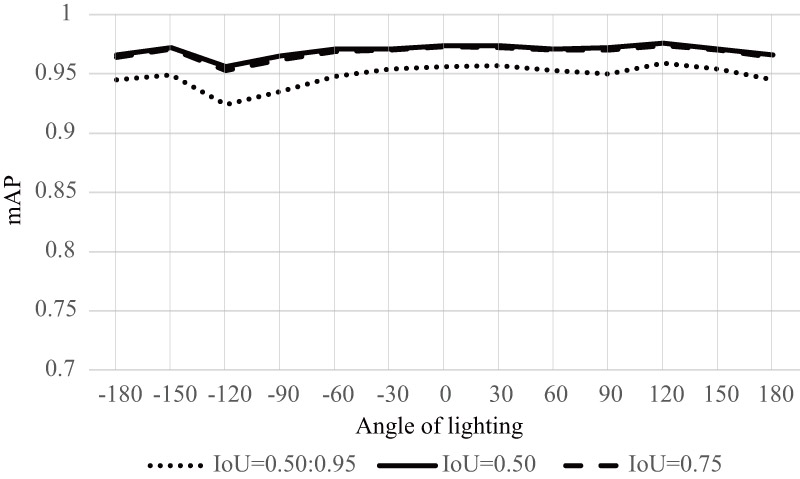

Braille text is used worldwide as a means of communication for the visually impaired. Although braille is primarily used by visually impaired individuals, sighted individuals may also want to read braille documents. For example, teachers at schools for the visually impaired may need to check homework or assignments written in braille, and care workers supporting the daily lives of visually impaired people may need to read braille documents. To meet such social demands, optical braille recognition technologies have been developed, typically using cameras and scanners. In particular, methods using deep learning models have achieved high accuracy in recent years. Because braille is represented by embossed dots on thick paper, the appearance of shadows varies significantly depending on the lighting angle. Therefore, the angle and intensity of light significantly affect the accuracy of braille recognition. In particular, for double-sided braille, where dots are embossed on both sides of the sheet, raised dots must be distinguished from recessed dots. Previous studies have mostly imposed constraints on the lighting angle, such as limiting the imaging method to scanners or requiring the light source to be positioned on top of the braille document when using a camera. However, for practical use in daily life, it is preferable to recognize braille documents independent of the lighting direction. In this study, we created a dataset of braille images captured under various lighting angles and developed a braille recognition model that is independent of the lighting angle by fine-tuning object detection and segmentation models. As object detection models, we implemented and compared RetinaNet, which was used in previous research, and the anchor-free YOLOX model. We also implemented BraU-Net, a customized segmentation model based on U-Net, and compared it with the object detection models. The object detection model achieved an accuracy of mAP50=0.98 or higher, regardless of the lighting angle.
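For readers unfamiliar with the mAP50 metric, the following minimal Python sketch illustrates how average precision at an IoU threshold of 0.5 can be computed for a single class on a single image. It is not the evaluation code used in this study. The function names `iou` and `ap50`, the box format, and the greedy matching rule are assumptions made for illustration; mAP50 would then be the mean of such per-class values.

```python
from typing import List, Tuple

# Hypothetical box format for this sketch: (x_min, y_min, x_max, y_max)
Box = Tuple[float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def ap50(preds: List[Tuple[float, Box]], truths: List[Box]) -> float:
    """Average precision at IoU >= 0.5 for one class (all-point interpolation).

    preds is a list of (confidence, box). Each prediction is greedily matched
    to the best still-unmatched ground-truth box; this is a simplification of
    the matching rules used by standard benchmarks.
    """
    if not truths:
        return 0.0
    matched = [False] * len(truths)
    hits = []
    for _, box in sorted(preds, key=lambda p: -p[0]):
        best_iou, best_j = 0.0, -1
        for j, truth in enumerate(truths):
            if not matched[j]:
                value = iou(box, truth)
                if value > best_iou:
                    best_iou, best_j = value, j
        if best_iou >= 0.5:
            matched[best_j] = True
            hits.append(True)
        else:
            hits.append(False)

    # Precision and recall after each prediction, in descending confidence.
    precisions, recalls, tp = [], [], 0
    for k, hit in enumerate(hits, start=1):
        tp += int(hit)
        precisions.append(tp / k)
        recalls.append(tp / len(truths))

    # Make the precision envelope non-increasing from right to left.
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])

    # Area under the precision-recall envelope.
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

Under this definition, a value close to 1 means that nearly every ground-truth box is found by a high-confidence prediction overlapping it by at least 50%, with few false detections ranked above the correct ones.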

Braille text recognition accuracy (mAP)


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
