Research Paper:

Multiscale Attention-Based Model for Image Enhancement and Classification

Mingyu Guo*

and Tomohito Takubo**

and Tomohito Takubo**

*Graduate School of Engineering, Osaka City University

3-3-138 Sugimoto, Sumiyoshi-ku, Osaka 558-8585, Japan

**Graduate School of Engineering, Osaka Metropolitan University

3-3-138 Sugimoto, Sumiyoshi-ku, Osaka 558-8585, Japan

Fine-grained image classification plays a crucial role in various applications, such as agricultural disease detection, medical diagnosis, and industrial inspection. However, achieving a high classification accuracy while maintaining computational efficiency remains a significant challenge. To address this issue, in this study, enhanced DetailNet (EDNET), a convolutional neural network (CNN) model designed to balance fine-detail preservation and global context understanding, was developed. EDNET integrates multiscale attention mechanisms and self-attention modules, enabling it to capture both local and global information simultaneously. Extensive ablation studies were conducted to evaluate the contribution of each module and EDNET was compared with the mainstream benchmark models ResNet50, EfficientNet, and vision transformers. The results demonstrate that EDNET achieves highly competitive performance in terms of accuracy, F1-score, and area under the receiver operating characteristic curve, while maintaining an optimal balance between parameter count and inference efficiency. In addition, EDNET was tested in both high-performance graphics processing unit (NVIDIA RTX 3090) and resource-constrained environments (Jetson Nano simulation). The results confirm that EDNET is deployable on edge devices, achieving an inference efficiency comparable to that of EfficientNet, while outperforming traditional CNN models in fine-grained classification tasks.

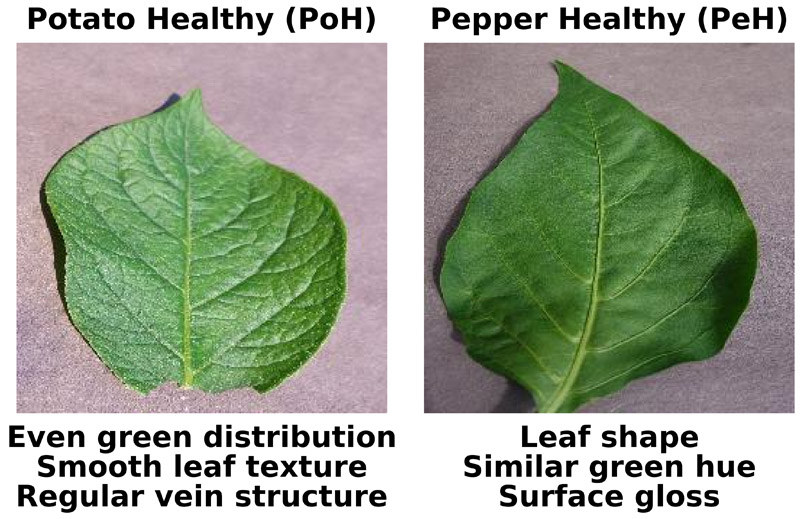

Leaf disease samples

- [1] S. P. Mohanty, D. P. Hughes, and M. Salathé, “Using deep learning for image-based plant disease detection,” Frontiers in Plant Science, Vol.7, Article No.1419, 2016. https://doi.org/10.3389/fpls.2016.01419

- [2] D. S. Kermany, M. Goldbaum et al., “Identifying medical diagnoses and treatable diseases by image-based deep learning,” Cell, Vol.172, No.5, pp. 1122-1131, 2018. https://doi.org/10.1016/j.cell.2018.02.010

- [3] P. Bergmann, M. Fauser, D. Sattlegger, and C. Steger, “MVTec AD – A comprehensive real-world dataset for unsupervised anomaly detection,” Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 9584-9592, 2019. https://doi.org/10.1109/CVPR.2019.00982

- [4] K. He, X. Zhang, S. Ren, and J. Sun, “Deep residual learning for image recognition,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 770-778, 2016. https://doi.org/10.1109/CVPR.2016.90

- [5] A. Krizhevsky, I. Sutskever, and G. E. Hinton, “ImageNet classification with deep convolutional neural networks,” Advances in Neural Information Processing Systems (NIPS), pp. 1097-1105, 2012.

- [6] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition,” Int. Conf. on Learning Representations (ICLR), 2015.

- [7] G. Huang, Z. Liu, L. Van Der Maaten, and K. Q. Weinberger, “Densely connected convolutional networks,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 2261-2269, 2017. https://doi.org/10.1109/CVPR.2017.243

- [8] A. Dosovitskiy, L. Beyer, A. Kolesnikov, D. Weissenborn, X. Zhai, T. Unterthiner, and G. Hinton, “An image is worth 16x16 words: Transformers for image recognition at scale,” arXiv preprint, arXiv.2010.11929, 2020. https://doi.org/10.48550/arXiv.2010.11929

- [9] H. Touvron, M. Cord, M. Douze, F. Massa, A. Sablayrolles, and H. Jegou, “Training data-efficient image transformers & distillation through attention,” Int. Conf. on Machine Learning (ICML), pp. 10347-10357, 2021.

- [10] Z. Liu, Y. Lin, Y. Cao, H. Hu, Y. Wei, Z. Zhang, S. Lin, and B. Guo, “Swin transformer: Hierarchical vision transformer using shifted windows,” Proc. of the IEEE/CVF Int. Conf. on Computer Vision (ICCV), pp. 9992-10002, 2021. https://doi.org/10.1109/ICCV48922.2021.00986

- [11] A. Vaswani, N. Shazeer, N. Parmar, J. Uszkoreit, L. Jones, A. N. Gomez, Ł. Kaiser, and I. Polosukhin, “Attention is all you need,” Advances in Neural Information Processing Systems (NeurIPS), pp. 5998-6008, 2017.

- [12] M. Tan and Q. V. Le, “EfficientNet: Rethinking model scaling for convolutional neural networks,” Int. Conf. on Machine Learning (ICML), pp. 6105-6114, 2019.

- [13] S. Mehta and M. Rastegari, “MobileViT: Light-weight, general-purpose, and mobile-friendly vision transformer,” arXiv preprint, arXiv.2110.02178, 2021. https://doi.org/10.48550/arXiv.2110.02178

- [14] L.-C. Chen, G. Papandreou, F. Schroff, and H. Adam, “Rethinking atrous convolution for semantic image segmentation,” arXiv preprint, arXiv.1706.05587, 2017. https://doi.org/10.48550/arXiv.1706.05587

- [15] J. Hu, L. Shen, and G. Sun, “Squeeze-and-excitation networks,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 7132-7141, 2018. https://doi.org/10.1109/CVPR.2018.00745

- [16] S. Woo, J. Park, J.-Y. Lee, and I. S. Kweon, “CBAM: Convolutional block attention module,” Proc. of the European Conf. on Computer Vision (ECCV), pp. 3-19, 2018. https://doi.org/10.1007/978-3-030-01234-2_1

- [17] Q. Wang, B. Wu, P. Zhu, P. Li, W. Zuo, and Q. Hu, “ECA-Net: Efficient channel attention for deep convolutional neural networks,” Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 11531-11539, 2020. https://doi.org/10.1109/CVPR42600.2020.01155

- [18] T.-Y. Lin, P. Dollár, R. Girshick, K. He, B. Hariharan, and S. Belongie, “Feature Pyramid Networks for Object Detection,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 2117-2125, 2017. https://doi.org/10.1109/CVPR.2017.106

- [19] D. G. Lowe, “Distinctive image features from scale-invariant keypoints,” Int. J. of Computer Vision, Vol.60, No.2, pp. 91-110, 2004. https://doi.org/10.1023/B:VISI.0000029664.99615.94

- [20] N. Dalal and B. Triggs, “Histograms of oriented gradients for human detection,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 886-893, 2005. https://doi.org/10.1109/CVPR.2005.177

- [21] X. Wang, R. Girshick, A. Gupta, and K. He, “Non-local neural networks,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 7794-7803, 2018. https://doi.org/10.1109/CVPR.2018.00813

- [22] T.-Y. Lin, A. RoyChowdhury, and S. Maji, “Bilinear CNN models for fine-grained visual recognition,” Proc. of the IEEE Int. Conf. on Computer Vision (ICCV), pp. 1449-1457, 2015. https://doi.org/10.1109/ICCV.2015.170

- [23] K. Sun, B. Xiao, D. Liu, and J. Wang, “Deep high-resolution representation learning for human pose estimation,” Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 5693-5703, 2019. https://doi.org/10.1109/CVPR.2019.00584

- [24] M. Tan, R. Pang, and Q. V. Le, “EfficientDet: Scalable and efficient object detection,” Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 10781-10790, 2020. https://doi.org/10.1109/CVPR42600.2020.01079

- [25] M. Sandler, A. Howard, M. Zhu, A. Zhmoginov, and L.-C. Chen, “MobileNetV2: Inverted residuals and linear bottlenecks,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 4510-4520, 2018. https://doi.org/10.1109/CVPR.2018.00474

- [26] N. Ma, X. Zhang, H.-T. Zheng, and J. Sun, “ShuffleNet V2: Practical guidelines for efficient CNN architecture design,” Proc. of the European Conf. on Computer Vision (ECCV), pp. 122-138, 2018. https://doi.org/10.1007/978-3-030-01264-9_8

- [27] K. Han, Y. Wang, Q. Tian, J. Guo, C. Xu, and C. Xu, “GhostNet: More features from cheap operations,” Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 1577-1586, 2020. https://doi.org/10.1109/CVPR42600.2020.00165

- [28] N. d’Ascoli, D. Schmid, and T. Brox, “ConViT: Improving vision transformers with soft convolutional inductive biases,” Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), 2021.

- [29] K. Choromanski, V. Likhosherstov, D. Dohan, X. Song, A. Gane, T. Sarlos, P. Hawkins, J. Davis, A. Mohiuddin, L. Kaiser, D. Belanger, L. Colwell, and A. Weller, “Rethinking attention with performers,” Int. Conf. on Learning Representations (ICLR), 2021.

- [30] Y. Xiong, Z. Zeng, R. Chakraborty, M. Tan, G. Fung, Y. Li, and V. Singh, “Nyströmformer: A nyström-based algorithm for approximating self-attention,” Proc. of the AAAI Conf. on Artificial Intelligence (AAAI), Vol.35, No.16, pp. 14138-14148, 2021.

- [31] H. Zhang, I. Goodfellow, D. Metaxas, and A. Odena, “Self-attention generative adversarial networks,” Proc. of the Int. Conf. on Machine Learning (ICML), pp. 7354-7363, 2019.

- [32] Q. Hou, D. Zhou, and J. Feng, “Coordinate attention for efficient mobile network design,” Proc. of the IEEE/CVF Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 13708-13717, 2021. https://doi.org/10.1109/CVPR46437.2021.01350

- [33] W. Wang, E. Xie, X. Li, D.-P. Fan, K. Song, D. Liang, T. Lu, P. Luo, and L. Shao, “Pyramid vision transformer: A versatile backbone for dense prediction without convolutions,” Proc. of the IEEE/CVF Int. Conf. on Computer Vision (ICCV), pp. 546-558, 2021. https://doi.org/10.1109/ICCV48922.2021.00061

- [34] H. Fan, B. Xiong, K. Mangalam, Y. Li, Z. Yan, J. Malik, and C. Feichtenhofer, “Multiscale vision transformers,” Proc. of the IEEE/CVF Int. Conf. on Computer Vision (ICCV), pp. 6804-6815, 2021. https://doi.org/10.1109/ICCV48922.2021.00675

- [35] Y. Li et al., “Rethinking vision transformers for mobilenet size and speed,” Proc. of the IEEE/CVF Int. Conf. on Computer Vision (ICCV), pp. 16843-16854, 2023. https://doi.org/10.1109/ICCV51070.2023.01549

- [36] R. Zhang, “Making convolutional networks shift-invariant again,” Int. Conf. on Machine Learning (ICML), pp. 7324-7334, 2019.

- [37] M. Lin, Q. Chen, and S. Yan, “Network in network,” Int. Conf. on Learning Representations (ICLR), 2014.

- [38] J. Yu, L. Yang, N. Xu, J. Yang, and T. S. Huang, “Slimmable neural networks,” Int. Conf. on Learning Representations (ICLR), 2019.

- [39] H. Cai, C. Gan, T. Wang, Z. Zhang, and S. Han, “Once-for-all: Train one network and specialize it for efficient deployment,” Int. Conf. on Learning Representations (ICLR), 2020.

- [40] B. Yang, G. Bender, Q. V. Le, and J. Ngiam, “CondConv: Conditionally parameterized convolutions for efficient inference,” Advances in Neural Information Processing Systems (NeurIPS), 2019.

- [41] Y. Cui, M. Jia, T.-Y. Lin, Y. Song, and S. Belongie, “Class-balanced loss based on effective number of samples,” Proc. of the IEEE Conf. on Computer Vision and Pattern Recognition (CVPR), pp. 9260-9269, 2019. https://doi.org/10.1109/CVPR.2019.00949

- [42] K. Cao, C. Wei, A. Gaidon, N. Arechiga, and T. Ma, “Learning imbalanced datasets with label-distribution-aware margin loss,” Advances in Neural Information Processing Systems (NeurIPS), 2019.

- [43] A. K. Menon, S. Jayasumana, A. S. Rawat, H. Jain, A. Veit, and S. Kumar, “Long-tail learning via logit adjustment,” Int. Conf. on Learning Representations (ICLR), 2021.

- [44] A. K. Singh, B. K. Singh, and S. K. Pandey, “Automated defect detection in casting components using deep learning,” J. of Manufacturing Systems, Vol.58, pp. 231-245, 2021.

- [45] D. P. Kingma and J. Ba, “Adam: A method for stochastic optimization,” arXiv preprint, arXiv.1412.6980, 2014. https://doi.org/10.48550/arXiv.1412.6980

- [46] K. He, X. Zhang, S. Ren, and J. Sun, “Delving deep into rectifiers: Surpassing human-level performance on ImageNet classification,” Proc. of the IEEE Int. Conf. on Computer Vision (ICCV), pp. 1026-1034, 2015. https://doi.org/10.1109/ICCV.2015.123

- [47] D. S. Moore, G. P. McCabe, and B. A. Craig, “Introduction to the Practice of Statistics (6th ed.),” W. H. Freeman and Company, 2007.

- [48] J. A. Rice, “Mathematical Statistics and Data Analysis (3rd ed.),” Duxbury Press, 2006.

- [49] Student, “The probable error of a mean,” Biometrika, Vol.6, No.1, pp. 1-25, 1908. https://doi.org/10.2307/2331554

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 Internationa License.