Research Paper:

SAIE-GZSL: Semantic Attribute Interpolation Enhancement for Generalized Zero-Shot Learning

Xiaomeng Zhang*, Zhi Zheng*,**,†, and Xiaomin Lin*

*College of Computer and Cyber Security, Fujian Normal University

No.8 Xuefu South Road, Shangjie, Minhou, Fuzhou, Fujian 350117, China

**College of Control Science and Engineering, Zhejiang University

No.38 Zheda Road, West Lake District, Hangzhou, Zhejiang 310027, China

†Corresponding author

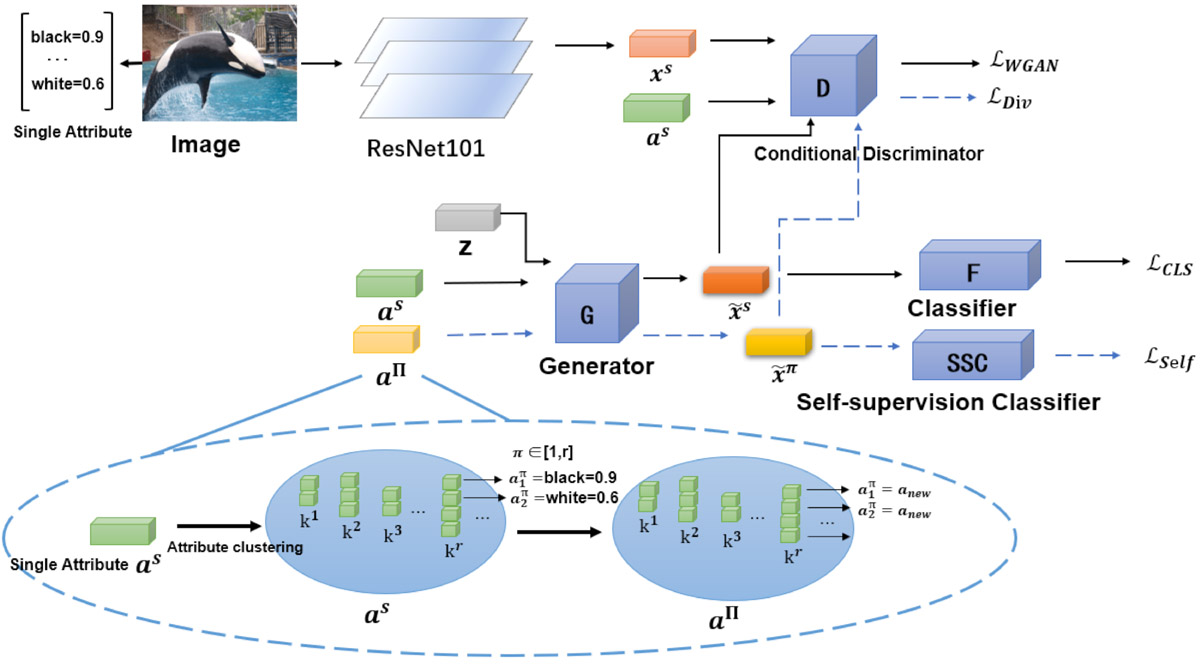

Generalized zero-shot learning (GZSL) aims to recognize both seen and unseen classes without labeled data for the unseen classes. Generative GZSL has attracted considerable attention because it converts GZSL into a fully supervised learning task. Most generative GZSL methods generate visual features from Gaussian noise and a single semantic attribute (each category corresponds to exactly one fixed semantic attribute), assuming a one-to-one correspondence between the visual features and that attribute. In practice, however, some attributes may be missing from an image, so its visual features lack those attributes and no longer map well to the class-level semantic attribute. Visual features of the same class should therefore be associated with diverse semantic attributes. To address this issue, we propose a semantic attribute enhancement method called “Semantic Attribute Interpolation Enhancement for Generalized Zero-Shot Learning (SAIE-GZSL).” The method uses interpolation to account for semantic attribute missingness in real-world data, thereby enhancing semantic diversity and generating more realistic and diverse visual features. We evaluated the proposed model on four benchmark datasets, and the results showed substantial improvements over current state-of-the-art methods, particularly for categories with severe attribute missingness.
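The abstract does not state the interpolation rule, so the following is only a minimal sketch of the general idea rather than the SAIE-GZSL method itself. It assumes, purely for illustration, that each augmented attribute is a linear interpolation between a class's attribute vector and a randomly masked copy of it (the mask mimics attributes missing from an image), and that the result is concatenated with Gaussian noise as input to a conditional generator. The function names, `drop_prob`, and `noise_dim` are hypothetical.

```python
import numpy as np


def interpolate_attributes(attr, n_samples, drop_prob=0.2, rng=None):
    """Return n_samples augmented copies of one class attribute vector.

    Assumption (not taken from the paper): each copy is a linear
    interpolation between the full vector and a randomly masked version.
    """
    rng = np.random.default_rng() if rng is None else rng
    attr = np.asarray(attr, dtype=float)
    # Randomly zero out attributes to mimic attributes missing from an image.
    keep = rng.random((n_samples, attr.size)) >= drop_prob
    masked = attr * keep
    # One interpolation weight per sample, drawn uniformly from [0, 1).
    lam = rng.random((n_samples, 1))
    return lam * attr + (1.0 - lam) * masked


def generator_inputs(attr, n_samples, noise_dim=8, rng=None):
    """Concatenate augmented attributes with Gaussian noise.

    This is the kind of input a conditional feature generator would consume;
    the generator itself is omitted here.
    """
    rng = np.random.default_rng() if rng is None else rng
    augmented = interpolate_attributes(attr, n_samples, rng=rng)
    noise = rng.standard_normal((n_samples, noise_dim))
    return np.concatenate([augmented, noise], axis=1)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    class_attr = np.array([1.0, 0.5, 0.0, 0.8])  # hypothetical attribute vector
    print(generator_inputs(class_attr, n_samples=3, rng=rng).shape)
```

Under these assumptions, each sampled row is a slightly different semantic description of the same class, which is one way a generator could be conditioned on diverse rather than fixed attributes.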

Semantic attribute enhancement for GZSL

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
