Research Paper:

Fall Detection Based on Inverted-Pendulum Model as Training Data for Monitoring Elderly People Living Solitarily Using Depth Camera

Eisuke Tomita* and Akinori Sekiguchi**,†

*Sustainable Engineering Program, Graduate School of Engineering, Tokyo University of Technology

1404-1 Katakura, Hachioji, Tokyo 192-0982, Japan

**Department of Mechanical Engineering, Tokyo University of Technology

1404-1 Katakura, Hachioji, Tokyo 192-0982, Japan

†Corresponding author

This study aims to develop a monitoring system for elderly individuals living alone, using time-series data generated via simulation as training data. In particular, we focus on classifying three types of motion: falling, static standing, and walking. First, we create a system that calculates body velocity and acceleration using a depth camera. Based on actual measurements of each motion, we identify their distinct characteristics. Subsequently, we implement an inverted-pendulum model (IPM), which is commonly used for human-motion analysis, in a dynamics simulator. Simulations of falling, static standing, and walking generate time-series data that closely resemble the measured motions. Finally, using the simulation-derived time-series data as training data, we perform machine-learning-based classification of falling, static standing, and walking motions measured with an Azure Kinect depth camera. Although some misclassifications occur, the system classifies most of the motions correctly.
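As an illustration of how velocity and acceleration can be derived from sampled positions, the following is a minimal sketch using finite differences. It is not the authors' implementation: the function name `finite_differences`, the input format (a list of positions of one tracked body point along one axis), and the sampling interval `dt` are assumptions made for this example.

```python
def finite_differences(positions, dt):
    """Return (velocities, accelerations) estimated from sampled positions.

    positions: list of floats, e.g. the height of a tracked body point in metres
               (hypothetical input; the real system's data layout may differ).
    dt: sampling interval in seconds (assumed constant).
    """
    if dt <= 0:
        raise ValueError("dt must be positive")
    # Forward difference of position gives velocity between consecutive frames.
    velocities = [(b - a) / dt for a, b in zip(positions, positions[1:])]
    # Forward difference of velocity gives acceleration.
    accelerations = [(b - a) / dt for a, b in zip(velocities, velocities[1:])]
    return velocities, accelerations


if __name__ == "__main__":
    # Positions sampled from x(t) = t^2 at t = 0, 1, 2, 3 s.
    v, a = finite_differences([0.0, 1.0, 4.0, 9.0], dt=1.0)
    print(v, a)
```

In practice, depth-camera measurements are noisy, so a smoothing step before differentiation would likely be needed; that step is omitted here.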

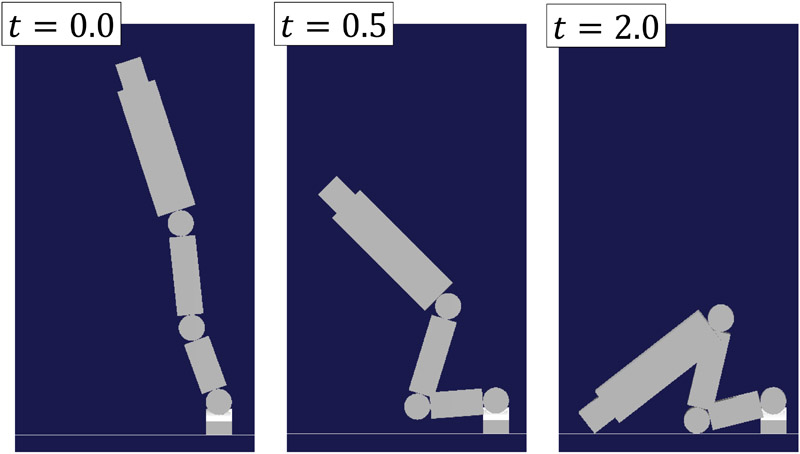

Falling-motion simulation using the inverted-pendulum model (IPM)
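The idea of generating a falling-motion time series from an inverted pendulum can be sketched as follows. This is a simplified stand-in, not the model used in the paper: it assumes a point mass on a rigid, massless rod pivoting at the ground, governed by $\ddot{\theta} = (g/l)\sin\theta$ with $\theta$ measured from the vertical. The function name `simulate_fall` and all parameter values (rod length, initial tilt, time step) are hypothetical.

```python
import math


def simulate_fall(length=1.0, theta0=0.05, omega0=0.0, dt=0.001, g=9.81,
                  max_time=10.0):
    """Integrate theta'' = (g / length) * sin(theta) until the rod is horizontal.

    theta is the tilt from the vertical in radians. Returns a list of
    (t, theta, omega, height) samples, with height = length * cos(theta).
    All default values are illustrative assumptions.
    """
    t, theta, omega = 0.0, theta0, omega0
    samples = [(t, theta, omega, length * math.cos(theta))]
    # max_time guards against a perfectly balanced start that never falls.
    while theta < math.pi / 2 and t < max_time:
        alpha = (g / length) * math.sin(theta)  # angular acceleration
        omega += alpha * dt                     # semi-implicit Euler: velocity first
        theta += omega * dt                     # then angle, using the new velocity
        t += dt
        samples.append((t, theta, omega, length * math.cos(theta)))
    return samples


if __name__ == "__main__":
    samples = simulate_fall()
    t_end, theta_end, omega_end, height_end = samples[-1]
    print(f"fall duration: {t_end:.3f} s, final angular velocity: {omega_end:.3f} rad/s")
```

The resulting height and angular-velocity series could then be differentiated or resampled to match the camera's frame rate before being used as training data; how the paper performs that step is not specified here.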

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.