Research Paper:

Automatic Classification of Sleep-Wake States of Newborns Using Only Body and Face Videos

Yuki Ito*, Kento Morita*, Asami Matsumoto**, Harumi Shinkoda***, and Tetsushi Wakabayashi*

*Graduate School of Engineering, Mie University

1577 Kurimamachiya-cho, Tsu, Mie 514-8507, Japan

**Suzuka University of Medical Science

3500-3 Minamitamagaki, Suzuka, Mie 513-8670, Japan

***Fukuoka Jo Gakuin Nursing University

1-1-7 Chidori, Koga, Fukuoka 811-3113, Japan

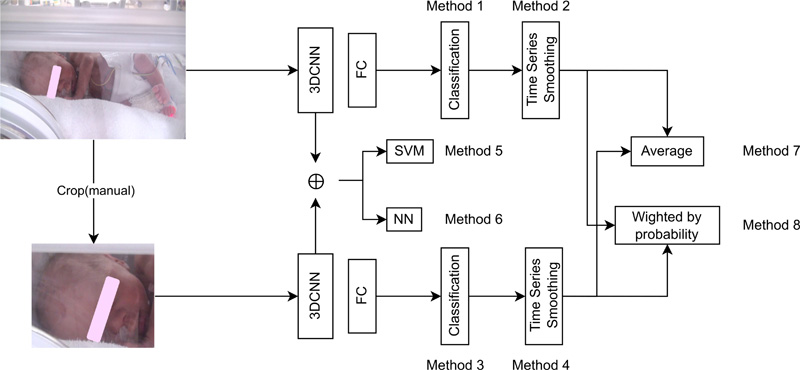

Premature newborns receive specialized medical care in the neonatal intensive care unit (NICU), where various medical devices emit excessive light and sound. Prolonged exposure to these stimuli may cause stress and hinder the development of the newborn’s nervous system. The formation of the biological clock, or circadian rhythm, is influenced by light and sound and is crucial for establishing sleep patterns. It is therefore essential to investigate how the NICU environment affects a newborn’s sleep quality and rhythms. Brazelton’s classification criteria are used to assess the sleep-wake state of newborns, but visual classification is time-consuming. We therefore propose a method that reduces this burden by automatically classifying the sleep-wake state of newborns from video. We use whole-body and face-only videos of newborns and classify them into five states according to Brazelton’s classification criteria. In this paper, we propose and compare two kinds of methods: classifying whole-body and face-only videos separately using a three-dimensional convolutional neural network (3D CNN), and combining the two results obtained from whole-body and face-only videos with time-series smoothing. In experiments using 16 videos of 8 newborn subjects, the highest accuracy of 0.611 and kappa score of 0.623 were achieved by weighting the time-series-smoothed results from whole-body and face-only videos by the output probabilities of the 3D CNN. This result indicates that time-series smoothing and probability-based combination of the results are effective.

Flow of the sleep-wake states classification methods
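
The combination step described in the abstract can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes each stream (whole-body and face-only) yields one five-class probability vector per video clip, uses a simple moving average as the time-series smoothing, and fuses the streams by summing the smoothed probabilities so that each stream's vote is weighted by its own confidence. The function names, the window length, and the moving-average choice are all hypothetical.

```python
import numpy as np

N_STATES = 5  # five sleep-wake states; label indices 0..4 are an assumption


def smooth_probabilities(probs, window=3):
    """Moving-average smoothing of per-clip class probabilities over time.

    probs: array of shape (T, N_STATES), one row per consecutive clip.
    window: odd number of clips to average over (hypothetical setting).
    """
    if window % 2 == 0:
        raise ValueError("window must be odd")
    probs = np.asarray(probs, dtype=float)
    pad = window // 2
    # Repeat the first and last rows so the output keeps length T.
    padded = np.pad(probs, ((pad, pad), (0, 0)), mode="edge")
    kernel = np.ones(window) / window
    columns = [
        np.convolve(padded[:, c], kernel, mode="valid")
        for c in range(probs.shape[1])
    ]
    return np.stack(columns, axis=1)


def fuse_streams(body_probs, face_probs, window=3):
    """Smooth both streams, sum their probabilities, and pick the top state.

    Summing probabilities lets the more confident stream dominate;
    this is one plausible reading of probability weighting, not the
    paper's confirmed rule.
    """
    combined = (
        smooth_probabilities(body_probs, window)
        + smooth_probabilities(face_probs, window)
    )
    return combined.argmax(axis=1)


if __name__ == "__main__":
    # Whole-body stream: confident in state 0, with one glitch at clip 2.
    body = [[0.9, 0.1, 0.0, 0.0, 0.0]] * 5
    body[2] = [0.1, 0.9, 0.0, 0.0, 0.0]
    # Face-only stream: uninformative (uniform) for every clip.
    face = [[0.2] * N_STATES] * 5
    print(fuse_streams(body, face).tolist())
```

In this toy input the isolated misclassification at the third clip is removed by the smoothing, and the uninformative face stream does not override the confident whole-body stream.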

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
