Research Paper:

Toward Question-Answering with Multi-Hop Reasoning and Calculation over Knowledge Using a Neural Network Model with External Memories

Yuri Murayama and Ichiro Kobayashi

Ochanomizu University

2-1-1 Otsuka, Bunkyo-ku, Tokyo 112-8610, Japan

The differentiable neural computer (DNC) is a neural network model with an addressable external memory that can solve algorithmic and question-answering tasks. Improved versions of the DNC have been proposed, including the robust and scalable DNC (rsDNC) and DNC-deallocation-masking-sharpness (DNC-DMS). However, integrating structured knowledge and calculations into these DNC models remains a challenging research question. In this study, we incorporate an architecture for knowledge and calculations into the DNC, rsDNC, and DNC-DMS to improve their ability to generate correct answers to questions that require multi-hop reasoning and calculations over structured knowledge. Our improved rsDNC model achieves the best mean top-1 accuracy, and our improved DNC-DMS model achieves the highest top-10 accuracy on the GEO dataset. In addition, our improved rsDNC model outperforms the other models in both mean top-1 accuracy and mean top-10 accuracy on the augmented GEO dataset.
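
The top-1 and top-10 accuracies reported above can be read as the fraction of questions whose correct answer appears among the model's first k ranked candidates. The following is a minimal sketch of that metric, not the authors' evaluation code. The function names, the toy data, and the assumption that the "mean" is taken over several runs or folds are all illustrative and do not come from the paper.

```python
from typing import Sequence


def top_k_accuracy(
    ranked_predictions: Sequence[Sequence[str]],
    gold_answers: Sequence[str],
    k: int,
) -> float:
    """Fraction of questions whose gold answer is among the first k candidates.

    ranked_predictions[i] is a hypothetical list of candidate answers for
    question i, ordered from most to least likely.
    """
    if len(ranked_predictions) != len(gold_answers):
        raise ValueError("need exactly one ranked candidate list per gold answer")
    if not gold_answers:
        return 0.0
    hits = sum(
        gold in ranked[:k]
        for ranked, gold in zip(ranked_predictions, gold_answers)
    )
    return hits / len(gold_answers)


def mean_accuracy(per_run_scores: Sequence[float]) -> float:
    """Average an accuracy over runs or folds (assumed meaning of 'mean')."""
    return sum(per_run_scores) / len(per_run_scores)


# Toy example with made-up candidate rankings for three questions.
ranked = [
    ["texas", "ohio"],  # gold answer ranked first
    ["ohio", "iowa"],   # gold answer ranked second
    ["utah", "iowa"],   # gold answer not among the candidates
]
gold = ["texas", "iowa", "maine"]

print(top_k_accuracy(ranked, gold, k=1))
print(top_k_accuracy(ranked, gold, k=2))
```

By construction, top-10 accuracy is never lower than top-1 accuracy for the same predictions, so it is possible for one model to lead on top-1 and another on top-10, as reported for the GEO dataset.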

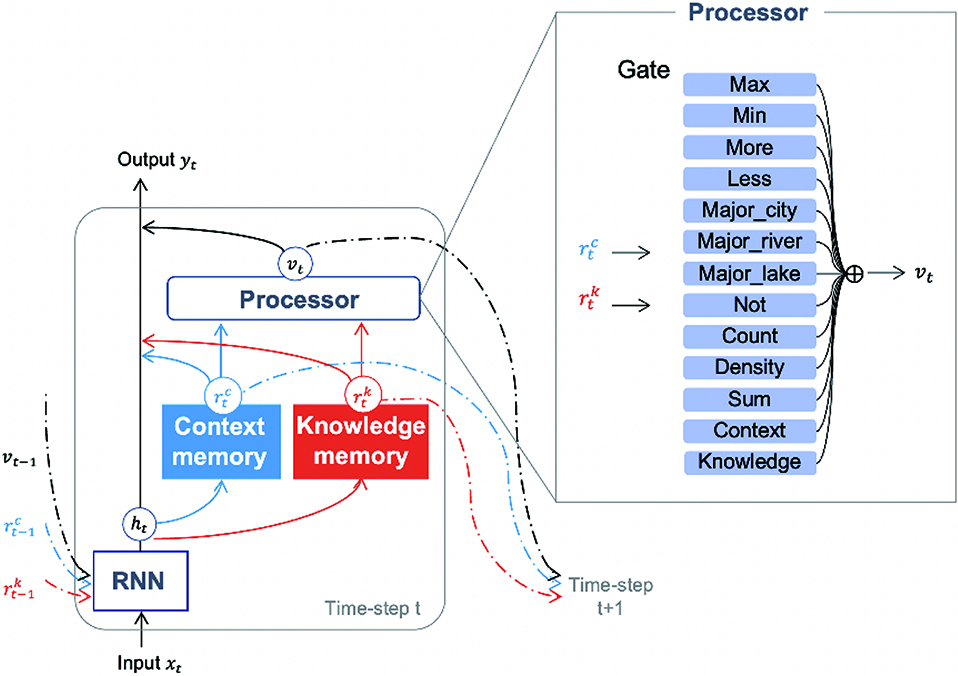

Overview of our proposed model

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.