Paper:

Coarse TRVO: A Robust Visual Odometry with Detector-Free Local Feature

Yuhang Gao and Long Zhao

School of Automation Science and Electrical Engineering, Beihang University

Beijing 100191, China

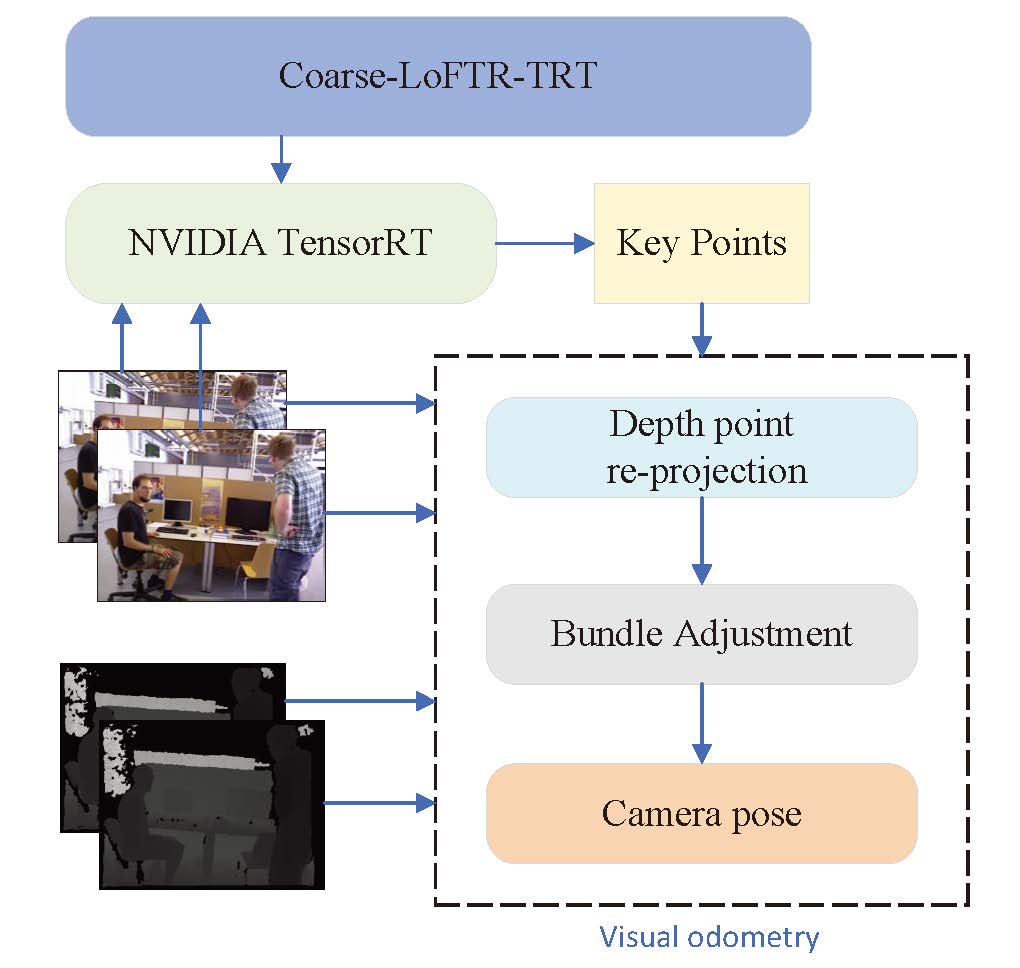

Visual SLAM systems require precise localization. To obtain consistent feature matching results, visual features produced by neural networks are increasingly used to replace traditional handcrafted features in situations with weak texture, motion blur, or repeated patterns. However, most deep learning enhanced SLAM systems improve accuracy at the cost of efficiency. In this paper, we propose Coarse TRVO, a visual odometry system that uses deep learning for feature matching. The deep learning network uses a CNN and transformer structures to provide dense, high-quality, end-to-end matches for a pair of images, even in indistinctive scenes where low-texture regions or repeating patterns occupy the majority of the field of view. We also made the proposed model compatible with the NVIDIA TensorRT runtime to boost the performance of the algorithm. After the matching point pairs are obtained, the camera pose is solved by optimization, minimizing the re-projection error of the feature points. Experiments on multiple data sets and in real environments show that Coarse TRVO achieves higher robustness and relative positioning accuracy than current mainstream visual SLAM systems.
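The re-projection error mentioned in the abstract can be illustrated with a minimal sketch. It assumes a pinhole camera model and matched 3D-to-2D point pairs; the intrinsics `K`, the synthetic scene, and the helper names `project` and `reprojection_cost` are hypothetical and chosen only for illustration. The sketch evaluates the cost that a pose solver would minimize; it does not reproduce the optimizer used in Coarse TRVO.

```python
import numpy as np

def project(K, R, t, points_3d):
    """Project Nx3 world points into the image using intrinsics K and pose (R, t)."""
    cam = points_3d @ R.T + t          # world frame -> camera frame
    uv = cam @ K.T                     # apply pinhole intrinsics
    return uv[:, :2] / uv[:, 2:3]      # perspective division to pixel coordinates

def reprojection_cost(K, R, t, points_3d, observed_uv):
    """Sum of squared pixel distances between projected and observed points."""
    residuals = project(K, R, t, points_3d) - observed_uv
    return float(np.sum(residuals ** 2))

# Hypothetical intrinsics and scene (assumptions, not values from the paper).
K = np.array([[500.0, 0.0, 320.0],
              [0.0, 500.0, 240.0],
              [0.0, 0.0, 1.0]])
rng = np.random.default_rng(0)
points_3d = rng.uniform([-1, -1, 4], [1, 1, 8], size=(20, 3))

# Ground-truth pose used to synthesize the "matched" 2D observations.
R_true = np.eye(3)
t_true = np.array([0.1, -0.05, 0.2])
observed_uv = project(K, R_true, t_true, points_3d)

# The cost vanishes at the true pose and grows when the pose is perturbed.
cost_true = reprojection_cost(K, R_true, t_true, points_3d, observed_uv)
t_off = t_true + np.array([0.05, 0.0, 0.0])
cost_off = reprojection_cost(K, R_true, t_off, points_3d, observed_uv)
print(cost_true, cost_off)
```

In a full pipeline, a nonlinear least-squares solver would adjust the rotation and translation to drive this cost down, typically with a robust loss so that wrong matches have less influence.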

Framework of the system

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
