Paper:

Simultaneous Detection of Loop-Closures and Changed Objects

Kanji Tanaka, Kousuke Yamaguchi, and Takuma Sugimoto

Graduate School of Engineering, University of Fukui

3-9-1 Bunkyo, Fukui, Fukui 910-8507, Japan

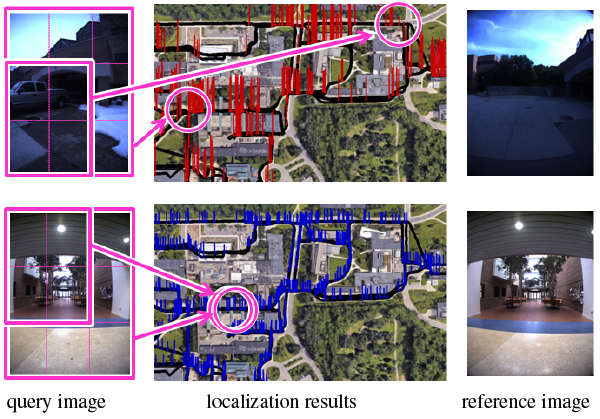

Loop-closure detection (LCD) in large non-stationary environments remains an important challenge in robotic visual simultaneous localization and mapping (vSLAM). To reduce computational and perceptual complexity, it is helpful for a vSLAM system to be able to perform image change detection (ICD). Unlike previous applications of ICD, time-critical vSLAM applications can neither assume an offline background modeling stage nor rely on maintenance-intensive background models. To address this issue, we introduce a novel maintenance-free ICD framework that requires no background modeling. We demonstrate that LCD can be reused as the main process for ICD with minimal extra cost. Based on these concepts, we develop a novel vSLAM component that enables simultaneous LCD and ICD. ICD experiments based on challenging cross-season LCD scenarios validate the efficacy of the proposed method.
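The idea of reusing LCD as the main process for ICD can be illustrated with a minimal sketch. It assumes an NBNN-style (naive-Bayes nearest-neighbor) matcher over local feature vectors, hypothetical helper names (`loop_closure`, `detect_changes`), a hypothetical region partition of the query image, and a hand-set `threshold`. It is an illustration of the concept, not the authors' implementation. The per-feature nearest-neighbor distances that LCD already computes to select the loop-closure candidate are cached and averaged per image region, so change detection adds no further search and needs no background model.

```python
import math
from typing import Dict, List, Optional, Sequence, Tuple

Vector = Sequence[float]


def nn_distance(feature: Vector, reference: List[Vector]) -> float:
    """Euclidean distance from one query feature to its nearest reference feature."""
    return min(math.dist(feature, r) for r in reference)


def loop_closure(query: List[Vector],
                 database: Dict[str, List[Vector]]) -> Tuple[Optional[str], List[float]]:
    """NBNN-style LCD (assumed): pick the database image minimizing the summed
    nearest-neighbor distances, and keep the per-feature distances of the winner."""
    best_id: Optional[str] = None
    best_dists: List[float] = []
    best_score = math.inf
    for image_id, feats in database.items():
        dists = [nn_distance(f, feats) for f in query]
        score = sum(dists)
        if score < best_score:
            best_id, best_dists, best_score = image_id, dists, score
    return best_id, best_dists


def detect_changes(query: List[Vector],
                   regions: Dict[str, List[int]],
                   database: Dict[str, List[Vector]],
                   threshold: float) -> Tuple[Optional[str], List[str]]:
    """Hypothetical ICD by reuse of LCD: a region whose features are poorly
    explained by the matched image (high mean NN distance) is flagged as changed."""
    match, dists = loop_closure(query, database)
    changed = []
    for region_id, feature_indices in regions.items():
        if not feature_indices:
            continue  # skip empty regions to avoid division by zero
        score = sum(dists[i] for i in feature_indices) / len(feature_indices)
        if score > threshold:
            changed.append(region_id)
    return match, changed
```

In this toy setting, a query whose features match image "A" except for one feature lying far from every feature of "A" yields "A" as the loop closure and flags only the region containing that outlying feature. The design choice being illustrated is that the distances behind the LCD decision double as per-region change evidence.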

A maintenance-free change object detector


This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
