Paper:

Interestingness Improvement of Face Images by Learning Visual Saliency

Dao Nam Anh

Electric Power University

235 Hoang Quoc Viet Road, Hanoi, Vietnam

Connecting features of face images with the interestingness of a face may assist in a range of applications, such as intelligent visual human-machine communication. To enable this connection, we combine interestingness and image features with machine learning techniques. In this paper, we use the visual saliency of face images as learning features to classify the interestingness of the images. Applying multiple saliency detection techniques specifically to objects in the images allows us to create a database of saliency-based features. Consistent estimation of facial interestingness, together with the use of multiple saliency methods, makes it possible to estimate and, in particular, to modify the interestingness of an image. To investigate interestingness, one of the personal characteristics conveyed by a face image, we test our method on a large benchmark face database. Taken together, the method may advance prospects for further research incorporating other personal characteristics and visual attention related to face images.
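The pipeline described above (saliency maps turned into learning features, then a classifier for interestingness) can be illustrated with a minimal sketch. The abstract does not state which saliency detectors, feature summaries, or classifier are used, so everything here is an assumption chosen for illustration: a single image-signature-style saliency map (the sign of the DCT, inverted and squared) stands in for the multiple detectors, a grid of block sums stands in for the saliency-based features, and a plain k-nearest-neighbour vote stands in for the learning step. The function names `dct_matrix`, `signature_saliency`, `saliency_features`, and `knn_predict` are hypothetical, and the sketch covers only classification, not the modification of interestingness.

```python
import numpy as np


def dct_matrix(n):
    """Orthonormal DCT-II matrix of size n x n (hypothetical helper)."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (i + 0.5) * k / n)
    c[0, :] = np.sqrt(1.0 / n)  # first row has a different scale factor
    return c


def signature_saliency(gray):
    """Saliency as the squared inverse DCT of the sign of the DCT.

    Assumption: this single detector stands in for the several saliency
    methods mentioned in the abstract. No smoothing is applied here.
    """
    h, w = gray.shape
    ch, cw = dct_matrix(h), dct_matrix(w)
    coeffs = ch @ gray @ cw.T            # forward 2-D DCT
    recon = ch.T @ np.sign(coeffs) @ cw  # inverse 2-D DCT of the sign pattern
    sal = recon ** 2
    return sal / (sal.sum() + 1e-12)     # normalise so the map sums to ~1


def saliency_features(gray, grid=4):
    """Summarise a saliency map as grid*grid block sums (assumed feature design)."""
    sal = signature_saliency(gray)
    h, w = sal.shape
    hh, ww = h - h % grid, w - w % grid  # crop so the grid divides evenly
    blocks = sal[:hh, :ww].reshape(grid, hh // grid, grid, ww // grid)
    return blocks.sum(axis=(1, 3)).ravel()


def knn_predict(train_x, train_y, query, k=3):
    """Majority vote among the k nearest training feature vectors."""
    dist = np.linalg.norm(train_x - query, axis=1)
    nearest = train_y[np.argsort(dist)[:k]]
    return int(np.bincount(nearest).argmax())


if __name__ == "__main__":
    # Synthetic stand-in data: random arrays, NOT real face images or labels.
    rng = np.random.default_rng(0)
    faces = rng.random((6, 32, 32))
    labels = np.array([0, 0, 0, 1, 1, 1])  # 1 = "interesting" (made-up labels)
    feats = np.stack([saliency_features(f) for f in faces])
    print(knn_predict(feats, labels, saliency_features(rng.random((32, 32)))))
```

In a real setting the random arrays would be replaced by cropped grayscale face images with interestingness annotations, and features from several saliency detectors could be concatenated before classification.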

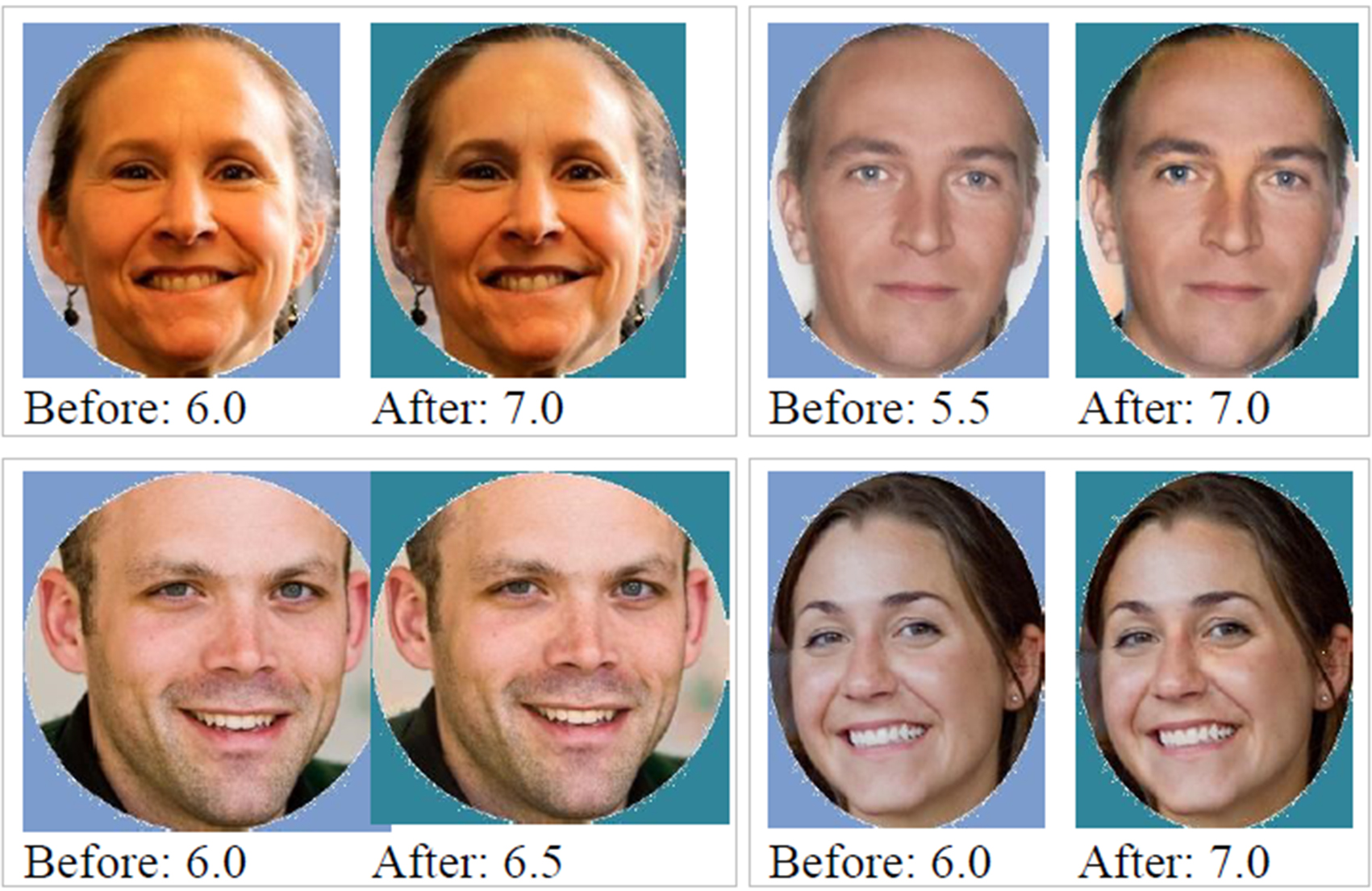

Examples of raising interestingness

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.