Paper:

Emotion Recognition Based on Multi-Composition Deep Forest and Transferred Convolutional Neural Network

Xiaobo Liu*,**, Xu Yin*,**, Min Wang*,**, Yaoming Cai***, and Guang Qi*

*School of Automation, China University of Geosciences

388 Lumo Road, Wuhan, Hubei 430074, China

**Hubei Key Laboratory of Advanced Control and Intelligent Automation for Complex Systems

388 Lumo Road, Wuhan, Hubei 430074, China

***School of Computer Science, China University of Geosciences

388 Lumo Road, Wuhan, Hubei 430074, China

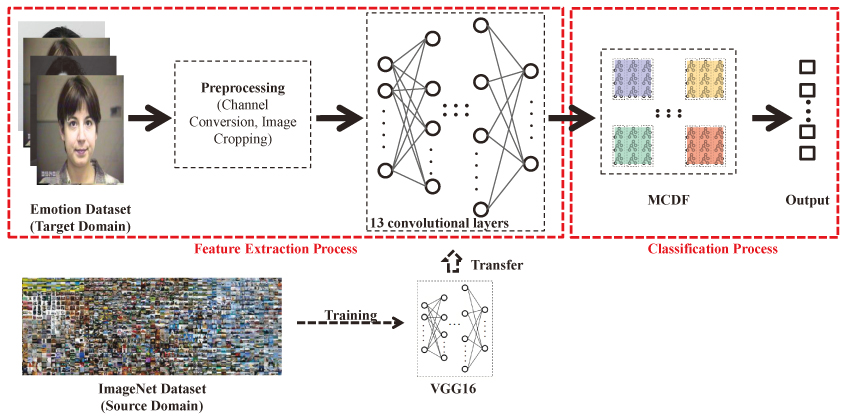

In human-machine interaction, facial emotion recognition plays an important role in recognizing the psychological state of humans. In this study, we propose a novel emotion recognition framework that uses a knowledge transfer approach to capture features and an improved deep forest model to determine the final emotion types. A very deep convolutional network pretrained on ImageNet is transferred to extract face and emotion features from other data sets, which addresses the problem of insufficient labeled samples. These features are then input into a classifier for facial emotion recognition called the multi-composition deep forest, which consists of 16 types of forests to enhance the diversity of the framework. The proposed method does not require training a network with a complex structure, and the decision tree-based classifier can achieve accurate results with very few parameters, making it easier to implement, train, and apply in practice. Moreover, the classifier can adaptively determine its model complexity without iteratively updating parameters. Experimental results on two emotion recognition problems demonstrate the superiority of the proposed method over several well-known facial emotion recognition methods.

The overall architecture for emotion recognition
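
The abstract states that the deep forest classifier decides its own model complexity without iterative parameter updates. In the original deep forest (gcForest) design, this works by growing a cascade level by level: each level's forests output class-probability vectors, these vectors are appended to the original features as the next level's input, and growth stops once validation accuracy no longer improves. The pure-Python sketch below illustrates only that cascade mechanism under stated assumptions. It is not the authors' multi-composition deep forest: it assumes two forest types per level (a random forest and a completely random forest, as in gcForest) rather than 16, uses depth-1 trees (stumps) to stay short, and computes the training class vectors in-sample instead of with k-fold cross-validation. All names (`Stump`, `Forest`, `fit_cascade`, `predict`, and so on) are hypothetical.

```python
import random
from collections import Counter


def mean_vec(vs):
    # Element-wise mean of equal-length class-probability vectors.
    return [sum(col) / len(vs) for col in zip(*vs)]


def gini(labels):
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values()) if n else 0.0


def leaf_dist(labels, k):
    # Laplace-smoothed class distribution stored in a leaf (k = number of classes).
    counts = Counter(labels)
    return [(counts[c] + 1) / (len(labels) + k) for c in range(k)]


class Stump:
    # Depth-1 tree (a simplification of full trees). completely_random=True draws the
    # feature and threshold at random (gcForest-style "completely random" tree);
    # otherwise it takes the best Gini split over sqrt(d) random features.
    def __init__(self, completely_random, rng):
        self.cr, self.rng = completely_random, rng

    def fit(self, X, y, k):
        d = len(X[0])
        if self.cr:
            self.f = self.rng.randrange(d)
            col = [x[self.f] for x in X]
            self.t = self.rng.uniform(min(col), max(col))
        else:
            best = None
            for f in self.rng.sample(range(d), max(1, int(d ** 0.5))):
                for t in sorted({x[f] for x in X}):
                    left = [yi for xi, yi in zip(X, y) if xi[f] <= t]
                    right = [yi for xi, yi in zip(X, y) if xi[f] > t]
                    score = len(left) * gini(left) + len(right) * gini(right)
                    if best is None or score < best[0]:
                        best = (score, f, t)
            _, self.f, self.t = best
        self.left = leaf_dist([yi for xi, yi in zip(X, y) if xi[self.f] <= self.t], k)
        self.right = leaf_dist([yi for xi, yi in zip(X, y) if xi[self.f] > self.t], k)
        return self

    def proba(self, x):
        return self.left if x[self.f] <= self.t else self.right


class Forest:
    # Bagged ensemble of stumps; outputs the averaged class vector.
    def __init__(self, completely_random, seed, n_trees=20):
        self.cr, self.rng, self.n_trees = completely_random, random.Random(seed), n_trees

    def fit(self, X, y, k):
        self.trees = []
        for _ in range(self.n_trees):
            idx = [self.rng.randrange(len(X)) for _ in X]  # bootstrap sample
            stump = Stump(self.cr, self.rng)
            self.trees.append(stump.fit([X[i] for i in idx], [y[i] for i in idx], k))
        return self

    def proba(self, x):
        return mean_vec([t.proba(x) for t in self.trees])


def vote(forests, x):
    # A level's prediction: arg-max of its forests' averaged class vectors.
    avg = mean_vec([f.proba(x) for f in forests])
    return max(range(len(avg)), key=avg.__getitem__)


def augment(forests, A, X):
    # Next-level input = original features + every forest's class vector.
    return [xo + [p for f in forests for p in f.proba(a)] for a, xo in zip(A, X)]


def fit_cascade(X_tr, y_tr, X_val, y_val, k, max_levels=5):
    levels, best_acc, A_tr, A_val = [], -1.0, X_tr, X_val
    for lvl in range(max_levels):
        forests = [Forest(cr, seed=10 * lvl + i).fit(A_tr, y_tr, k)
                   for i, cr in enumerate((False, True))]
        acc = sum(vote(forests, a) == t for a, t in zip(A_val, y_val)) / len(y_val)
        if acc <= best_acc:
            break  # no validation gain: the cascade depth is fixed here
        levels.append(forests)
        best_acc = acc
        A_tr, A_val = augment(forests, A_tr, X_tr), augment(forests, A_val, X_val)
    return levels, best_acc


def predict(levels, x):
    # Replay the cascade: each level sees original features + previous class vectors.
    a = x
    for forests in levels[:-1]:
        a = x + [p for f in forests for p in f.proba(a)]
    return vote(levels[-1], a)
```

Calling `fit_cascade(X_tr, y_tr, X_val, y_val, k)` on lists of feature vectors (in the paper's setting, the features produced by the transferred CNN) returns the grown levels, so `len(levels)` is the depth chosen by the validation data, and `predict(levels, x)` classifies a new vector. Each level only fits tree splits and leaf statistics, so no gradient-based parameter updates are involved.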

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.
