Paper:

Scalable Change Detection Using Place-Specific Compressive Change Classifiers

Kanji Tanaka

University of Fukui

3-9-1 Bunkyo, Fukui, Fukui 910-8507, Japan

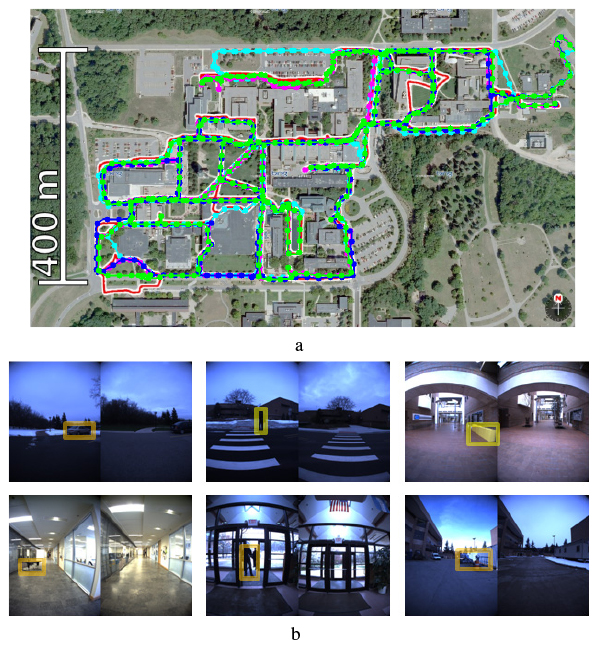

With recent progress in large-scale map maintenance and long-term map learning, detecting changes on a large-scale map from visual images captured by a mobile robot has become an increasingly critical problem. In this paper, we present an efficient approach to change-classifier learning that employs a collection of place-specific change classifiers. Our approach requires memorizing only the training examples (rather than the classifiers themselves), and these examples can be further compressed in the form of bag-of-words (BoW). Furthermore, the proposed approach can incorporate the most recent map into the classifiers simply by adding or deleting the few training examples that correspond to them. The proposed algorithm is applied to and evaluated on a practical long-term cross-season change detection system that consists of a large number of place-specific object-level change classifiers.
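The property claimed above, that each place stores only compressed training examples and is updated by adding or deleting them, can be illustrated with a minimal Python sketch. Everything in it is a hypothetical stand-in rather than the paper's method: the class `PlaceSpecificChangeDetector`, its methods, the cosine-similarity nearest-neighbor novelty test (swapped in for the paper's change classifier), and the `threshold` value. Local features are assumed to be already quantized into integer visual-word IDs.

```python
import math
from collections import Counter, defaultdict


def bow(word_ids):
    """Compress a list of visual-word IDs into a sparse BoW histogram."""
    return Counter(word_ids)


def cosine(a, b):
    """Cosine similarity between two sparse histograms (0.0 if either is empty)."""
    dot = sum(v * b.get(k, 0) for k, v in a.items())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0


class PlaceSpecificChangeDetector:
    """Hypothetical sketch: one set of stored BoW examples per place, no trained model."""

    def __init__(self, threshold=0.5):
        # Assumed similarity threshold below which a query counts as "changed".
        self.threshold = threshold
        # place_id -> {example_id: BoW histogram}
        self.examples = defaultdict(dict)

    def add_example(self, place_id, example_id, word_ids):
        # Updating a place with the most recent map = storing one more histogram.
        self.examples[place_id][example_id] = bow(word_ids)

    def delete_example(self, place_id, example_id):
        # Removing an outdated example; a missing ID is silently ignored.
        self.examples[place_id].pop(example_id, None)

    def is_changed(self, place_id, word_ids):
        # The "classifier" is evaluated on demand from the stored examples:
        # a query is flagged as changed if no stored example of this place is
        # similar enough. Places with no stored examples are treated as changed.
        query = bow(word_ids)
        stored = self.examples.get(place_id, {})
        best = max((cosine(query, h) for h in stored.values()), default=0.0)
        return best < self.threshold
```

Because no classifier parameters are kept, refreshing a place with the most recent map reduces to `add_example` and `delete_example` calls; the trade-off in this sketch is that each query scans all stored examples of its place.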

Scalable change detection for long-term map maintenance

This article is published under a Creative Commons Attribution-NoDerivatives 4.0 International License.